3.4 Descriptions of Spectroscopic Calibration Steps

This section provides a more detailed description of the calibration processing steps and algorithms applied by calcos (v3.2.1 and later), including the switches, reference file inputs, science file inputs, and the output products. Setting a calibration switch to PERFORM enables execution of the corresponding pipeline calibration task, while setting it to OMIT causes that task to be skipped.

Future modifications and updates to calcos will be announced in STScI Analysis Newsletters (STANs), calcos release notes, and documented at the COS Web site:

http://www.stsci.edu/hst/instrumentation/cos/documentation/stsci-analysis-newsletter-stan

http://www.stsci.edu/hst/instrumentation/cos/documentation/calcos-release-notes

http://www.stsci.edu/hst/instrumentation/cos

To provide a concrete example, the calibration steps for FUV TIME-TAG data in the pipeline processing flow are described next. When present, each sub-section header begins with the switch that activates the module.

3.4.1 Initialization

When the pipeline is initiated, it first opens an association file to determine which files should be processed together. For TIME-TAG data (but not ACCUM data), it also creates a corrtag file before anything else is done. The initial contents of this file are simply a copy of the rawtag file, except that new columns have been added to the corrtag file. It is then updated throughout the running of the pipeline.

3.4.2 BADTCORR: Bad Time Intervals

This module flags time intervals in TIME-TAG data that have been identified as bad.

- Reference file: BADTTAB

- Input files: rawtag

- Header keywords updated: EXPTIME, EXPTIMEA and EXPTIMEB (for FUV data), NBADT or NBADT_A and NBADT_B (number of events flagged for NUV or FUVA and FUVB, respectively), and TBADT or TBADT_A and TBADT_B (time lost to bad events in NUV or FUVA and FUVB, respectively).

The BADTTAB table lists zero or more intervals of time which will be excluded from the final spectrum for various reasons. This file is currently empty (as of May 2018), but it could be populated by the COS team if events occur on orbit which render data collected during specific time intervals not scientifically useful. It is also available for the convenience of the user. For example, the user may wish to eliminate observations obtained in the daytime portion of the orbit to minimize airglow contamination, or they may want to isolate a certain portion of an exposure. In these cases, modifying BADTTAB may be the most convenient means to accomplish this. Events in the rawtag file having times within any bad time interval in BADTTAB are flagged in the DQ column of the corrtag table with data quality = 2048. The exposure time is updated to reflect the sum of the good time intervals, defined in Section 2.4.1. This step applies only to TIME-TAG data.
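The flagging and exposure-time bookkeeping described above can be sketched as follows. This is a minimal illustration, not the calcos implementation: the function names are hypothetical, and the bad intervals are assumed to be given in seconds since the start of the exposure, on the same scale as the event times.

```python
import numpy as np

DQ_BAD_TIME = 2048  # data quality value quoted in the text for bad time intervals


def flag_bad_times(time, dq, bad_intervals):
    """Set the bad-time DQ value on events inside any (start, stop) interval.

    Assumption: event times and intervals are both in seconds since EXPSTART.
    Returns the updated DQ array and the number of events flagged.
    """
    time = np.asarray(time, dtype=float)
    dq = np.asarray(dq, dtype=np.int16).copy()
    bad = np.zeros(time.shape, dtype=bool)
    for start, stop in bad_intervals:
        bad |= (time >= start) & (time <= stop)
    dq[bad] |= DQ_BAD_TIME
    return dq, int(bad.sum())


def good_exposure_time(exp_start, exp_stop, bad_intervals):
    """Exposure duration minus the bad intervals (clipped to the exposure, overlaps merged)."""
    clipped = sorted(
        (max(s, exp_start), min(e, exp_stop))
        for s, e in bad_intervals
        if e > exp_start and s < exp_stop
    )
    lost, cursor = 0.0, exp_start
    for s, e in clipped:
        s = max(s, cursor)  # skip any part already counted by an earlier interval
        if e > s:
            lost += e - s
            cursor = e
    return (exp_stop - exp_start) - lost
```

For example, a 100 s exposure with overlapping bad intervals (10, 20) and (15, 30) keeps 80 s of good time in this sketch.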

3.4.3 RANDCORR: Add Pseudo-Random Numbers to Pixel Coordinates

This module adds a random number between –0.5 and +0.5 to each x and y position of a photon detected by the FUV detectors.

- Reference file: none

- Input files: rawtag, rawaccum

- Header keywords updated: RANDSEED

For FUV TIME-TAG data RANDCORR adds random numbers to the raw coordinates of each event, i.e.:

$$
\begin{aligned}
\mathrm{XCORR} &= \mathrm{RAWX} + \Delta x \\
\mathrm{YCORR} &= \mathrm{RAWY} + \Delta y
\end{aligned}
$$

where Δx and Δy are uniformly distributed, pseudo-random numbers in the interval –0.5 < Δx, Δy ≤ +0.5.

The result of this operation is to convert the raw integer pixel values into floating point values so that the counts are smeared over each pixel's area.

For FUV ACCUM data, a pseudo TIME-TAG list of x and y values is created with an entry for each recorded count. Next, a unique Δx and Δy are added to each entry.

If the RANDSEED keyword in the raw data file is set to its default value of –1, the system clock is used to create a seed for the random number generator. This seed value is then written to the RANDSEED keyword in the output files. Alternatively, an integer seed (other than –1) in the range –2147483648 to +2147483647 can be specified by modifying the RANDSEED keyword in the raw data file. Doing so will ensure that identical results will be obtained on multiple runs.

RANDCORR is only applied to events in the active area of the detector, as defined in the BRFTAB. Stim pulses, for example, do not have this correction applied.
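A minimal sketch of the randomization and seeding behavior is given below. It uses NumPy's random generator rather than whatever generator calcos uses, so it will not reproduce calcos output for a given seed. The function name `randcorr` is hypothetical, the restriction to the active area is not modeled, and NumPy only accepts non-negative seeds, so negative RANDSEED values other than –1 are not handled here.

```python
import time

import numpy as np


def randcorr(rawx, rawy, randseed=-1):
    """Return (XCORR, YCORR, seed used) for integer raw coordinates.

    Offsets are uniform on (-0.5, +0.5], matching the interval given in the text.
    """
    if randseed == -1:
        # Default case: derive a seed from the system clock and report it back,
        # mirroring how the text says RANDSEED is written to the output files.
        randseed = int(time.time())
    rng = np.random.default_rng(randseed)
    rawx = np.asarray(rawx, dtype=float)
    rawy = np.asarray(rawy, dtype=float)
    # rng.random() is uniform on [0, 1); 0.5 - u maps that onto (-0.5, +0.5].
    dx = 0.5 - rng.random(rawx.shape)
    dy = 0.5 - rng.random(rawy.shape)
    return rawx + dx, rawy + dy, randseed
```

Calling the function twice with the same explicit seed returns identical coordinates, which is the reproducibility property described above.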

3.4.4 TEMPCORR: Temperature-Dependent Distortion Correction

This module corrects for linear distortions of the FUV detector coordinate system that are caused by changes in the temperature of the detector electronics.

- Reference file: BRFTAB

- Input files: rawtag, rawaccum

- Header keywords updated: none

The FUV XDL detector has virtual, not physical, detector elements that are defined by the digitization of an analog signal. The charge packet associated with a photon event is split and transported to opposite sides of the detector where the difference in travel time of the two packets determines the location of the photon event on the detector. Since the properties of both the delay line and the sensing electronics are subject to variations as a function of temperature, apparent shifts and stretches in the detector format can occur.

To measure the magnitude of this effect, electronic pulses (Figure 1.7) are recorded at two reference points in the image ("electronic stim pulses") at specified time intervals throughout each observation. TEMPCORR first determines the locations and separations of the recorded stim pulse positions and then compares them to their expected locations in a standard reference frame (as defined in columns SX1, SY1, SX2, and SY2 of the BRFTAB file). The differences between the observed and reference stim pulse positions are used to construct a linear transformation between the observed and reference frame locations for each event (or pseudo-event in the case of ACCUM data). TEMPCORR then applies this transformation to the observed events, placing them in the standard reference frame. The stim pulse parameters are written to the file headers using the keyword names described in Table 2.15. In cases where one of the stim pulses falls off the active area of the detector, calcos assumes it is in its normal position and outputs a warning before continuing with calibration. This may significantly affect the reliability of the wavelength scale.
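The linear transformation can be illustrated along one axis. The sketch below assumes that the correction on each axis is an independent stretch plus shift fixed by the two stim positions; the text says only that the transformation is linear, so this form, the function names, and the numbers in the example are all assumptions.

```python
import numpy as np


def stim_linear_map(obs1, obs2, ref1, ref2):
    """Slope and intercept of the line that maps obs1 -> ref1 and obs2 -> ref2."""
    slope = (ref2 - ref1) / (obs2 - obs1)
    intercept = ref1 - slope * obs1
    return slope, intercept


def tempcorr_axis(coords, obs1, obs2, ref1, ref2):
    """Move event coordinates on one axis into the reference frame."""
    slope, intercept = stim_linear_map(obs1, obs2, ref1, ref2)
    return slope * np.asarray(coords, dtype=float) + intercept
```

With observed stims at 100 and 1100 and reference positions 110 and 1090 (made-up values), an event observed at 600 stays at 600 while events at the stim locations land exactly on the reference positions.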

3.4.5 GEOCORR and IGEOCORR: Geometric Distortion Correction

This module corrects geometric distortions in the FUV detectors.

- Reference file: GEOFILE

- Input files: rawtag, rawaccum

- Header keywords updated: none

The GEOCORR module corrects for geometric distortions due to differences between the inferred and actual physical sizes of pixels in the FUV array (ground measurements indicated that geometric distortions in the NUV MAMA are negligible). It produces a geometrically-corrected detector image with equally sized pixels. This is done by applying the displacements listed in the reference file, GEOFILE, which lists the corrections in x and y for each observed pixel location. The geometric distortion varies across the detector, and the GEOFILE gives the distortion only at the center of each pixel.

If IGEOCORR is set to 'PERFORM' (the default), the displacements to correct the distortion at (XCORR, YCORR) will be interpolated to that location, which includes a fractional part (even before geometric correction) due to TEMPCORR and RANDCORR. If IGEOCORR is 'OMIT,' the correction will be taken at the nearest pixel to (XCORR, YCORR).
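The difference between the two IGEOCORR settings can be sketched as a lookup in a displacement image. The text does not state which interpolation scheme calcos uses, so bilinear interpolation is assumed here; the function name is hypothetical, and the sign with which the displacement is applied to the coordinates is deliberately left out.

```python
import numpy as np


def displacement_at(image, x, y, interpolate=True):
    """Look up a displacement image (indexed [row = y, column = x]) at position (x, y).

    interpolate=True  -> bilinear interpolation (stand-in for IGEOCORR = PERFORM)
    interpolate=False -> value at the nearest pixel (stand-in for IGEOCORR = OMIT)
    The image is assumed to be at least 2 x 2 pixels.
    """
    ny, nx = image.shape
    if not interpolate:
        ix = int(np.clip(np.rint(x), 0, nx - 1))
        iy = int(np.clip(np.rint(y), 0, ny - 1))
        return float(image[iy, ix])
    x0 = int(np.clip(np.floor(x), 0, nx - 2))
    y0 = int(np.clip(np.floor(y), 0, ny - 2))
    fx, fy = x - x0, y - y0
    row_lo = (1 - fx) * image[y0, x0] + fx * image[y0, x0 + 1]
    row_hi = (1 - fx) * image[y0 + 1, x0] + fx * image[y0 + 1, x0 + 1]
    return float((1 - fy) * row_lo + fy * row_hi)
```

The same lookup would be used once with the X-distortion extension and once with the Y-distortion extension for the relevant segment.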

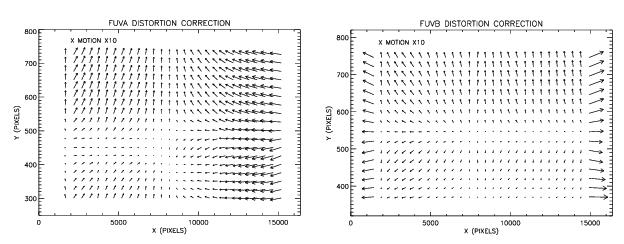

GEOFILE was created by using a ray-trace analysis of the COS FUV optical system. A set of wavelength calibration exposures was taken while stepping the aperture mechanism in the cross-dispersion direction to create an image of dispersed line profiles. The ray trace and measured line positions were compared to determine the shift between the measured (uncorrected) and predicted (corrected) line positions (see Figure 3.7).

The distortion corrections are given as images in the GEOFILE in the following order:

- Extension 1 contains an image of the X distortions for the FUVA

- Extension 2 contains an image of the Y distortions for the FUVA

- Extension 3 contains an image of the X distortions for the FUVB

- Extension 4 contains an image of the Y distortions for the FUVB

3.4.6 DGEOCORR: Delta Geometric Distortion Correction

This module further corrects geometric distortions in the FUV detectors in addition to normal geometric correction (GEOCORR). This module is designed to run after GEOCORR and to operate in the same manner, but cannot be done without first performing GEOCORR.

- Reference file: DGEOFILE

- Input files: rawtag, rawaccum

- Header keywords updated: none

The DGEOCORR module is designed to improve upon the GEOCORR corrections by removing residual geometric distortions that remain after the geometric correction has been applied. The correction is done by applying the displacements listed in the reference file, DGEOFILE, which lists the corrections in x and y for each observed pixel location.

If IGEOCORR is 'PERFORM' (the default), the displacements to correct the distortion at (XCORR, YCORR) will be interpolated to that location. If IGEOCORR is 'OMIT,' the correction will be taken at the nearest pixel to (XCORR, YCORR). Note that the use or omission of IGEOCORR applies to both GEOCORR and DGEOCORR as it is not appropriate to use IGEOCORR for only GEOCORR or DGEOCORR. DGEOCORR cannot be set to 'PERFORM' unless GEOCORR is also set to 'PERFORM', as DGEOCORR assumes that the GEOCORR has already been performed.

The DGEOFILE distortion corrections are in the same format as those of the GEOFILE and are given as images in the following order:

- Extension 1 contains an image of the X distortions for the FUVA

- Extension 2 contains an image of the Y distortions for the FUVA

- Extension 3 contains an image of the X distortions for the FUVB

- Extension 4 contains an image of the Y distortions for the FUVB

3.4.7 XWLKCORR, YWLKCORR: Walk Correction

These modules correct for the fact that the reported position of events on the FUV XDL detector is a function of pulse height (an effect known as walk). XWLKCORR corrects for walk effects in the X (dispersion) axis; YWLKCORR corrects for walk in the Y (cross-dispersion) direction.

- Reference files: XWLKFILE, YWLKFILE

- Input file: rawtag

- Header keywords updated: none

The file formats for the XWLKFILE and YWLKFILE are identical. Each contains two 16384 × 32 IMAGE extensions. One extension (EXTNAME = 'FUVA') is used to correct the data on Segment A, and the other (EXTNAME = 'FUVB') is used for Segment B. These images are used as lookup tables, with the columns (0–16383) of each table corresponding to the thermally and geometrically corrected X-detector pixel, and the rows of each table corresponding to the pulse height value (0–31). Note that both X- and Y-walk corrections are only a function of the X pixel and independent of Y.

Since the X-coordinate is a floating point value after thermal and geometric corrections, the correction is found by interpolating the adjacent values found in the table. The calculated value is then subtracted from X or Y to give the final, corrected location.
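The table lookup can be sketched as follows, assuming the image is held as an array indexed `[pha, column]` and that linear interpolation between the two neighboring columns is what "interpolating the adjacent values" means. The function name and the tiny table in the usage note are illustrative only.

```python
import numpy as np


def walk_correction(table, x, pha):
    """Interpolate a walk lookup table at floating-point X for each event's PHA row.

    table : 2-D array, rows = pulse height value, columns = X pixel
    x     : corrected X coordinate of each event (float)
    pha   : pulse height of each event (integer row index)
    """
    table = np.asarray(table, dtype=float)
    x = np.asarray(x, dtype=float)
    pha = np.asarray(pha, dtype=int)
    ncols = table.shape[1]
    x0 = np.clip(np.floor(x).astype(int), 0, ncols - 2)
    frac = np.clip(x - x0, 0.0, 1.0)
    return (1.0 - frac) * table[pha, x0] + frac * table[pha, x0 + 1]
```

The returned value would then be subtracted from X (using the X-walk table) or from Y (using the Y-walk table); in both cases the lookup is made with the X coordinate, as noted above.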

In reality, the walk and geometric distortions occur in parallel in the detector, i.e., the raw (x,y) position reported by the electronics is a function of both position and pulse height. In fact, an alternative way of thinking about these effects is that the detector has a different geometric correction at each PHA value. However, because of the way the data used for the geometric correction was obtained, and for simplicity in implementation, the two are done serially in calcos.

The format of the walk correction was changed significantly in calcos version 3.2.1. Prior to that version, a polynomial in X and PHA was used to apply the correction using the WALKCORR routine. However, that method did not provide a fine enough correction with polynomials of reasonable order.

3.4.8 DEADCORR: Non-linearity Correction

This module corrects for count rate-dependent non-linearities in the COS detectors, also known as the "dead time" correction.

- Reference file: DEADTAB

- Input files: rawtag, rawaccum, images

- Header keywords updated: none

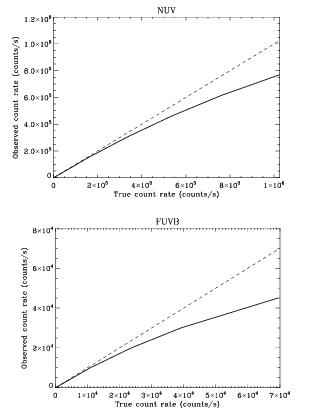

DEADCORR corrects for non-linear photon detection in the COS detector electronics. Both the FUV and NUV detector electronics have a small temporal overhead when counting events. This overhead becomes noticeable when the count rates become large.

The efficiency of the detector's photon counting is calculated as the ratio of the true count rate and the observed count rate. This value is referred to as the deadtime. The deadtime for each detector is modeled and the reference file DEADTAB contains a lookup table of the correction for various count rates. Figure 3.8 shows how the measured count rates deviate from the actual count rates as a function of the actual count rate for the NUV detector, and segment B of the FUV detector (the FUV segment A curve is nearly identical).

For TIME-TAG data, the deadtime correction is computed every 10 seconds. The observed count rate is the number of events within that time interval, and the deadtime factor is determined by interpolation within the values in DEADTAB. The values in the EPSILON column in the corrtag file for events within that time interval will then be divided by the deadtime factor. For ACCUM data, the observed average count rate taken from a header keyword for the digital event counter is used. The deadtime factor is then found by interpolation within the DEADTAB, the same as for TIME-TAG data, and the science and error arrays are divided by the deadtime factor. The deadtime correction parameters are written to the file headers using the keyword names described in Table 2.15.

3.4.9 PHACORR: Pulse Height Filter

This module operates on FUV data and flags events whose pulse heights are outside of nominal ranges.

- Reference files: PHATAB, PHAFILE

- Input files: rawtag, rawaccum

- Header keywords updated: NPHA_A, NPHA_B, PHAUPPRA, PHAUPPRB, PHALOWRA, PHALOWRB

This module works differently for FUV TIME-TAG and ACCUM data. It is not used for NUV data.

For FUV TIME-TAG data, each event includes a 5 bit (0–31) Pulse Height Amplitude (PHA). The value of the pulse height is a measure of the charge produced by the microchannel plate stack, and can be used to identify events which are likely due to cosmic rays or detector background. The PHATAB reference file lists lower and upper pulse height thresholds expected for valid photon events for each detector segment. The PHACORR module compares each event's pulse height to these thresholds, and if the pulse height is below the Lower Level Threshold (LLT) or above the Upper Level Threshold (ULT), the event is flagged in the DQ column of the corrtag table with a data quality bit of 512. The upper and lower thresholds are also written to the PHALOWRA (PHALOWRB) and PHAUPPRA (PHAUPPRB) keywords in the output data files for segment A (B), while the number of events flagged is written to the NPHA_A and NPHA_B keywords.
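The threshold test for one detector segment can be sketched as below. The function name is hypothetical and the threshold values in the usage note are made up; real values come from the PHATAB.

```python
import numpy as np

DQ_PHA_OUT_OF_BOUNDS = 512  # data quality value quoted in the text


def phacorr_timetag(pha, dq, llt, ult):
    """Flag events whose pulse height is below llt or above ult.

    Returns the updated DQ array and the number of events flagged
    (the quantity the text says is recorded in NPHA_A or NPHA_B).
    """
    pha = np.asarray(pha)
    dq = np.asarray(dq, dtype=np.int16).copy()
    out_of_range = (pha < llt) | (pha > ult)
    dq[out_of_range] |= DQ_PHA_OUT_OF_BOUNDS
    return dq, int(out_of_range.sum())
```

With illustrative thresholds of 2 and 23, events with pulse heights 1 and 30 are flagged, while events at exactly 2 or 23 are kept.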

Default values of the lower (LLT) and upper (ULT) thresholds have been chosen based on the properties of the detector and are implicit in data used when generating other reference files (e.g., FLUXTAB).

With continuing exposure to photons, pulses from the micro channel plates (MCPs) have smaller amplitudes, a phenomenon known as "gain sag." As this occurs, the thresholds in the PHATAB may be updated to maximize the number of real events counted. Which PHATAB is used for data collected at a particular time will be handled by the USEAFTER date keyword in the calibration file header.

The PHAFILE reference file is an alternative to the PHATAB, and allows pulse-height limits to be specified on a per-pixel basis rather than a per-segment basis. The PHAFILE has a primary header and four data extensions, consisting of the FUVA PHA lower limits, FUVA PHA upper limits, FUVB PHA lower limits, and FUVB PHA upper limits respectively. The use of a PHAFILE instead of a PHATAB (if both are specified and PHACORR=PERFORM, the PHAFILE will take precedence) allows a number of adjustments, including (for example) the use of a lower PHA threshold in gain-sagged regions, thus allowing more background events to be filtered out while still continuing to detect photon events in gain-sagged regions. As of May 2018, no PHAFILE has been produced by the COS team, but in the future one or more such files may be produced for use with FUV TIME-TAG data. Note that the use of a PHAFILE requires calcos 2.14 or later.

For FUV ACCUM data, pulse height information is not available for individual events. However, a 7 bit (0–127) Pulse Height Distribution (PHD) array, containing a histogram of the number of occurrences of each pulse height value over the entire detector segment, is created onboard for each exposure. PHACORR compares the data in this pha file to the values in the PHATAB file. Warnings are issued if the peak of the distribution (modal gain) does not fall between the scaled values of LLT and ULT; or if the average of the distribution (mean gain) does not fall between the MIN_PEAK and MAX_PEAK values in PHATAB. The PHALOWRA and PHAUPPRA, or PHALOWRB and PHAUPPRB keywords are also populated in the output files with the LLT and ULT values from the PHATAB.

3.4.10 DOPPCORR: Correct for Doppler Shift

This module corrects for the effect that the orbital motion of HST has on the arrival location of a photon in the dispersion direction.

- Reference files: DISPTAB, XTRACTAB or TWOZXTAB

- Input files: rawtag, rawaccum

- Header keywords updated: none

During a given exposure the photons arriving on the FUV and NUV detectors are Doppler shifted due to the orbital motion of HST. The orbital velocity of HST is 7.5 km/s, so spectral lines in objects located close to the orbital plane of HST can be broadened up to 15 km/s, which can be more than a resolution element.

DOPPCORR corrects for the orbital motion of HST. It operates differently on TIME-TAG and ACCUM files:

For TIME-TAG files the raw events table contains the actual detector coordinates of each photon detected, i.e., the photon positions will include the smearing from the orbital motion. In this case DOPPCORR will add an offset to the pixel coordinates (the XCORR column) in the events table to correct for this motion. The corrected coordinates are written to the column XDOPP in the corrtag file for both FUV and NUV data.

For ACCUM files the Doppler correction is applied onboard and is not performed by calcos. This means, however, that the pixel coordinates of a spectral feature can differ from where the photon actually hit the detector, a factor which affects the data quality initialization and flat-field correction. Therefore, for ACCUM images DOPPCORR shifts the positions of pixels in the bad pixel table BPIXTAB to determine the maximum bounds that could be affected. It is also used to convolve the flat-field image by an amount corresponding to the Doppler shift which was computed on orbit. The information for these calculations is contained in the following header keywords:

- DOPPONT: True if Doppler correction was done onboard.

- ORBTPERT: Orbital period of HST in seconds.

- DOPMAGT: Magnitude of the Doppler shift in pixels.

- DOPZEROT: Time (in MJD) when the Doppler shift was zero and increasing.

The "T" suffix at the end of each of these keywords indicates that they were derived from the onboard telemetry, whereas the other keywords described below were computed on the ground from the orbital elements of HST. The two sets of keywords can differ by a small amount, but they should be nearly identical.

DOPPCORR assumes that the Doppler shifts vary sinusoidally with time according to the orbital movement of HST. The following keywords are used to perform the correction and are obtained from the first extension (EVENTS) in the rawtag:

- EXPSTART: start time of the exposure (MJD)

- DOPPZERO: the time (MJD) when the Doppler shift was zero and increasing (i.e., when HST was closest to the target)

- DOPPMAG: the number of pixels corresponding to the Doppler shift (used only for shifting the data quality flag arrays and flat fields)

- ORBITPER: the orbital period of HST in seconds

The data columns used in the correction are TIME (elapsed seconds since EXPSTART) and RAWX (position of photon along dispersion direction). The Doppler correction to be applied is then

$$
\mathrm{SHIFT} = -\frac{\mathrm{DOPPMAGV}}{c\,d}\,\lambda(\mathrm{XCORR})\,\sin\!\left(\frac{2\pi t}{\mathrm{ORBITPER}}\right),
$$

where DOPPMAGV is the Doppler shift magnitude including the pointing direction of HST, c is the speed of light (km/s), d is the dispersion of the grating used in the observation (Å/pixel), λ(XCORR) is the wavelength at the XCORR position being corrected (obtained from the dispersion solution for that grating and aperture in the DISPTAB reference file) and t is defined as

$$
t = (\mathrm{EXPSTART} - \mathrm{DOPPZERO}) \times 86400 + \mathrm{TIME},
$$

where the factor of 86400 converts from days to seconds.
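The two expressions above translate directly into a few lines of code. The sketch below assumes DOPPMAGV is expressed in km/s (so that the ratio with c is dimensionless) and takes the wavelength as an input instead of evaluating the DISPTAB dispersion solution; the function name and the numbers in the usage note are illustrative.

```python
import numpy as np

C_KM_S = 299792.458  # speed of light in km/s


def doppler_shift(doppmagv, dispersion, wavelength, expstart, doppzero, time, orbitper):
    """Doppler shift in pixels for events at the given TIME values.

    doppmagv   : Doppler shift magnitude (assumed km/s)
    dispersion : grating dispersion d (Angstrom/pixel)
    wavelength : wavelength at the event's XCORR position (Angstrom)
    expstart   : exposure start (MJD)
    doppzero   : time of zero, increasing Doppler shift (MJD)
    time       : seconds since EXPSTART (scalar or array)
    orbitper   : orbital period (seconds)
    """
    t = (expstart - doppzero) * 86400.0 + np.asarray(time, dtype=float)
    amplitude = doppmagv / (C_KM_S * dispersion) * wavelength
    return -amplitude * np.sin(2.0 * np.pi * t / orbitper)
```

For made-up inputs of 7.5 km/s, 0.01 Å/pixel, 1300 Å, and a 5700 s period, an event a quarter of an orbit after DOPPZERO is shifted by about -3.25 pixels, and an event at DOPPZERO is not shifted at all.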

3.4.11 FLATCORR: Flat-field Correction

This module corrects for pixel-to-pixel non-uniformities in the COS detectors.

- Reference file: FLATFILE

- Input files: rawtag, rawaccum, images

- Header keywords updated: none

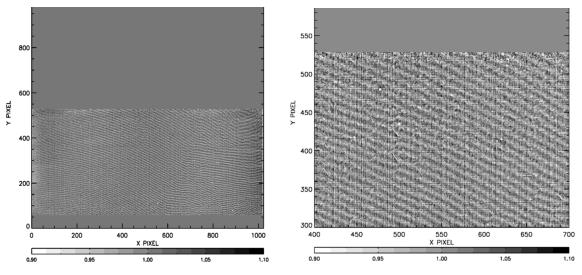

The FLATCORR step corrects for pixel-to-pixel sensitivity differences across the detector. It uses a flat-field image located in the file specified by the FLATFILE header keyword. Figure 3.9 shows an NUV flat. For spectroscopic data, any wavelength dependence of the detector response or remaining low frequency spatial variations are removed by the flux calibration step (FLUXCORR, Section 3.4.20). Flat fielding is performed in geometrically corrected space, and because the pixel-to-pixel variations should be largely wavelength independent, only one reference image is used per detector or detector segment (NUV, FUVA, and FUVB). The flat-field correction is applied differently for TIME-TAG and ACCUM mode data for both spectroscopic and imaging modes.

For spectroscopic TIME-TAG exposures, each photon in the events list is individually corrected. In the corrtag file, the photon weight in the EPSILON column is divided by the flat-field value at the event’s detector location rounded to the nearest pixel (XCORR, YCORR for FUV; RAWX, RAWY for NUV).
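A minimal sketch of the per-event correction follows. The flat is assumed to be a 2-D array indexed `[y, x]`, and events are assumed to fall on the flat (coordinates are clipped to its edges here, which is a simplification); the function name is hypothetical.

```python
import numpy as np


def flatcorr_timetag(epsilon, x, y, flat):
    """Divide each event weight by the flat-field value at the nearest pixel."""
    flat = np.asarray(flat, dtype=float)
    ix = np.clip(np.rint(x).astype(int), 0, flat.shape[1] - 1)
    iy = np.clip(np.rint(y).astype(int), 0, flat.shape[0] - 1)
    return np.asarray(epsilon, dtype=float) / flat[iy, ix]
```

An event landing on a pixel whose flat-field value is 0.5 has its weight doubled, while an event on a pixel with value 1.0 is unchanged.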

For spectroscopic ACCUM mode data, photons are summed into an image onboard by the COS electronics. To compensate for the motion of HST during the observation, spectroscopic exposures are taken with Doppler compensation performed during the accumulation (science header keyword DOPPONT=TRUE). During Doppler compensation, photon locations are shifted as the data are received, and the underlying flat field at each imaged pixel is an average of the original pixel position sensitivities. FLATCORR replicates this averaging for the flat-field correction using the same control parameters as those onboard (DOPPMAGT, DOPZEROT, ORBTPERT) if DOPPCORR=PERFORM (Section 3.4.10). The convolved flat-field image is applied to the EPSILON column in the pseudo-corrtag file.

NUV images using the mirrors are not Doppler corrected. In this case, DOPPCORR=OMIT, and the input data are divided by the flat field without convolution.

For both the flt and counts files, error arrays are created based on counting statistics (Section 2.7), but they are not used in further processing.

It was discovered on-orbit that the NUV suffers some vignetting. This causes a structure in "pixel space," affecting roughly the first 200 pixels of all three spectral stripes by as much as 20%. The NUV flat field was originally modified to correct for this effect, but variation in the vignetting caused sufficient errors that the vignetting is no longer included in the NUV flat field. Work continues on grating-specific vignetting corrections.

For the FUV channels, the ground flats proved inadequate. Consequently, the current FUV flats correct primarily for the effects of grid wires and low order flat field variations (see COS ISR 2013-09 and COS ISR 2016-15).

3.4.12 WAVECORR: Wavecal Correction

For spectroscopic data, this module determines the location of the wavelength calibration spectrum on the detector relative to a template, and then applies zero point shifts to align the wavecal and the template.

- Reference files: LAMPTAB, WCPTAB, DISPTAB, XTRACTAB or TWOZXTAB

- Input files: rawtag, rawaccum

- Header keywords updated: SHIFT1[A-C], SHIFT2[A-C], LMP_ONi, LMPOFFi, LMPDURi, LMPMEDi. Creates a lampflash file for TAGFLASH data.

The wavecal step of calcos determines the shift of the 2-D image on the detector along each axis resulting from thermal motions and drifts within an OSM (Optics Select Mechanism) encoder position. This step applies only to spectroscopic data, TIME-TAG and ACCUM, for both the FUV and NUV detectors. The shifts are determined from one or more contemporaneous wavelength calibration observations of a spectral line lamp (wavecal) which must be obtained without moving the OSM between the science and wavecal exposures.

There are four types of wavecals, as described in the COS IHB sec. 5.7. For ACCUM data the spectrum of the calibration lamp is contained in an exposure that is separate from that of the science (AUTO or GO wavecals). For TIME-TAG data the wavecals can also be separate exposures, but the default when observing with the PSA aperture is TAGFLASH mode. In the TAGFLASH mode the line lamp is turned on and off (flashed) one or more times during each science exposure, producing a wavecal spectrum that is offset in the cross-dispersion direction from the science spectrum (see Figure 1.9 and Figure 1.10). The algorithm used to determine the shifts is the same in either case, but the way that the shift is determined at the time of the observation differs. Thus, we begin by describing how the offsets are found.

Determining the offsets of the wavecal spectra:

For each wavecal, the location of the spectrum in the cross-dispersion direction is determined by collapsing the spectrum along the dispersion direction using the extraction slope defined in the XTRACTAB table (SLOPE). The location of the brightest pixel, after boxcar smoothing, is taken as the spectrum location and that location is compared to the nominal position defined in the XTRACTAB table (B_SPEC). The offsets from nominal positions for segments A and B (FUV) or stripes A, B, and C (NUV) are recorded in the lampflash file (which is created at this stage) in the SHIFT_XDISP field. The two FUV segments are processed independently. Cross-dispersion shifts are determined for each NUV stripe and then the average is computed and applied to all three stripes. The sign of the SHIFT_XDISP entry is positive if the spectrum was found at a larger pixel number than the nominal location.
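The cross-dispersion search can be sketched as follows. For simplicity the extraction slope is taken to be zero, so collapsing along the dispersion direction is a plain sum over columns; the boxcar width of 3 pixels and the function name are assumptions, not values taken from calcos.

```python
import numpy as np


def xdisp_offset(image, b_spec, box=3):
    """Offset of the brightest smoothed row from the nominal location b_spec.

    image : 2-D wavecal image indexed [cross-dispersion, dispersion]
    Positive result: spectrum found at a larger pixel number than nominal.
    """
    profile = np.asarray(image, dtype=float).sum(axis=1)   # collapse along dispersion
    kernel = np.ones(box) / box                            # boxcar smoothing kernel
    smoothed = np.convolve(profile, kernel, mode="same")
    return float(np.argmax(smoothed)) - b_spec
```

A spectrum peaking at row 6 with a nominal location of 4 gives an offset of +2, consistent with the sign convention stated for SHIFT_XDISP.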

To determine the offsets in the dispersion direction, the wavecal spectrum is collapsed along the cross-dispersion direction and compared to the template wavecal (LAMPTAB) taken with the same grating, central wavelength, and FPOFFSET. For the NUV, wavecal spectra offsets for each stripe are determined independently. The line positions are determined from a least squares fit to a shifted and scaled version of the template spectrum. The maximum range for shifting the wavecal and template wavecal spectra is defined by the value of XC_RANGE in the WCPTAB table. Calcos takes into account the FP-POS of the wavecal spectrum by shifting it by FP_PIXEL_SHIFT (from the column in the LAMPTAB) where FP_PIXEL_SHIFT=0 for FP-POS=3 and all other FP-POS settings are shifted to the FP-POS=3 position before fitting them to the template wavecal. The final shift is stored as SHIFT_DISP in the lampflash file and the minimum value of chi squared is stored in the CHI_SQUARE array.
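The idea of fitting a shifted and scaled template can be illustrated with a brute-force search over integer shifts. This is a simplified stand-in: calcos may determine sub-pixel shifts and weight the fit differently, and the function name, the use of a mean squared residual in place of a formal chi squared, and the sign convention (positive when the observed lines sit at larger pixel numbers than the template) are all assumptions of this sketch.

```python
import numpy as np


def find_disp_shift(spectrum, template, xc_range):
    """Return (shift, scale, residual) minimizing the misfit over |shift| <= xc_range.

    spectrum and template are 1-D arrays of equal length.
    For each trial shift, the best-fitting scale factor is the linear
    least-squares solution, and the residual is the mean squared difference.
    """
    spectrum = np.asarray(spectrum, dtype=float)
    template = np.asarray(template, dtype=float)
    n = spectrum.size
    best = None
    for shift in range(-xc_range, xc_range + 1):
        # Overlap region: spectrum[i] is compared with template[i - shift].
        lo, hi = max(0, shift), min(n, n + shift)
        s = spectrum[lo:hi]
        t = template[lo - shift:hi - shift]
        norm = np.dot(t, t)
        if norm == 0.0:
            continue  # template has no signal in the overlap region
        scale = np.dot(s, t) / norm
        residual = float(np.mean((s - scale * t) ** 2))
        if best is None or residual < best[2]:
            best = (shift, float(scale), residual)
    return best
```

The search window plays the role of XC_RANGE: alignments outside it are never tested, which also guards against locking onto the wrong pair of lines.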

Applying the offsets to the science spectra:

The way the offsets are applied to the spectral data depends on whether the data were obtained with AUTO or GO wavecals or with TAGFLASH wavecals. For AUTO or GO wavecals, the wavecals are obtained at different times than the spectral data and temporal interpolation is done to determine the appropriate shifts. For TAGFLASH data, the wavecals are interspersed with the spectral data, allowing more precise and, consequently, more intricate corrections to be made. In either case, the result is saved in the X[Y]FULL entries in the corrtag file. Because the corrections can be time dependent, the differences between X[Y]CORR and X[Y]FULL can also be time dependent. This step of the calibration amounts to a time dependent translation of the detector coordinate system to a coordinate system relative to the wavecal spectrum, which is more appropriate for wavelength calibration.

AUTO or GO wavecals

For ACCUM science exposures which are bracketed by AUTO or GO wavecal observations, the shifts determined from the bracketing wavecal exposures are linearly interpolated to the middle time of the science observation, and the interpolated values are assigned to the SHIFT1[A-C] (dispersion direction) and SHIFT2[A-C] (cross-dispersion direction) keywords in the science data header. If there is just one wavecal observation in a dataset, or if there are more than one but they don’t bracket the science observation, the SHIFT1[A-C] and SHIFT2[A-C] keywords are just copied from the nearest wavecal in the association to the science data header.

For non-TAGFLASH TIME-TAG science exposures bracketed by AUTO or GO wavecal observations, the shifts determined from the wavecals are interpolated (linearly) so that each event in the corrtag file is shifted according to its arrival time. The SHIFT1[A-C] and SHIFT2[A-C] keywords recorded in the science data header are in this case the averages of the values applied. As in the ACCUM case, if there is only one wavecal observation in a dataset, or if there are more than one but they do not bracket the science observation, the SHIFT1[A-C] and SHIFT2[A-C] keywords are just copied from the nearest wavecal to the science data header.
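The per-event interpolation can be sketched with `numpy.interp`, which interpolates linearly between the wavecal times and holds the end values constant outside them. The function name is hypothetical, and all times are assumed to be on a common scale (for example, seconds since the start of the science exposure).

```python
import numpy as np


def shifts_for_events(event_times, wavecal_times, wavecal_shifts):
    """Linearly interpolate wavecal shifts to each event's arrival time.

    Returns the per-event shifts and their average (the text says the
    header keywords record the average of the values applied).
    wavecal_times must be in increasing order.
    """
    per_event = np.interp(event_times, wavecal_times, wavecal_shifts)
    return per_event, float(np.mean(per_event))
```

For wavecals at 0 s and 1000 s with shifts of 1.0 and 2.0 pixels, events at 0, 500, and 1000 s receive shifts of 1.0, 1.5, and 2.0 pixels, and the recorded average is 1.5.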

TAGFLASH DATA

A TAGFLASH wavecal is a lamp exposure that is taken concurrently with a TIME-TAG science exposure, and the photon events for both the wavecal lamp and the science target are mixed together in the same events table. In many respects, TAGFLASH wavecals are handled differently from conventional wavecals.

The nominal start and stop times for each lamp flash are read from keywords in the corrtag table. The actual start and stop times can differ from the nominal times, so calcos determines the actual times (restricted to being within the nominal start-to-stop intervals) by examining the number of photon events within each 0.2-second interval in the wavecal region defined in the XTRACTAB table. A histogram of the count rate is constructed. The histogram is expected to have one peak near zero, corresponding to dark counts, and another at high count rate, due to the lamp illumination. The average count rate when the lamp is on is taken to be the count rate for the second peak of the histogram. The lamp turn-on and turn-off times are taken to be the times when the count rate rises above or sinks below half the lamp-on count rate.

Calcos uses the time of the median photon event within a lamp turn-on and turn-off interval as the time of the flash. The keywords LMP_ONi and LMPOFFi (i is the one-indexed flash number) are updated with the actual turn-on and turn-off times, in seconds, since the beginning of the science exposure. The keywords LMPDURi and LMPMEDi are updated with the actual duration and median time of the flash.

As before, the cross-dispersion location of each wavecal spectrum is determined by collapsing it along the dispersion direction and comparing it with the template in the XTRACTAB table to produce the SHIFT_XDISP entries in the lampflash file. The wavecal spectrum is then collapsed along the cross-dispersion direction to produce a 1-D spectrum that is fit to the template spectrum to obtain the SHIFT_DISP entries. There is one row in the lampflash table for each flash. Typically there will be more than one wavecal flash during a science exposure, so the shifts will be piece-wise linearly interpolated between flashes. The SHIFT1[A-C] and SHIFT2[A-C] values that are recorded in the science data header are the average of the shift values found from the different flashes.

SPLIT Wavecals at LP6

Due to a light leak through the flat-field calibration aperture, wavecal lamps cannot be flashed during a science exposure at LP6. Therefore, science exposures at LP6 are essentially bracketed by AUTO or GO wavecals, and the process for applying the offset to the science spectra is similar to that for AUTO or GO wavecals described above. However, for exposures longer than 960 s, calcos applies an additional wavelength shift, the magnitude of which has been determined by empirically modeling COS data. This simulates the lamp flash that would typically be taken during such long exposures. There is an estimated increase in the wavelength uncertainty of < 0.5 pixels for LP6 science exposures with this additional wavecal shift applied.

Additional Functions

WAVECORR also corrects the flt and counts files which result from both ACCUM and TIME-TAG science data for the offsets in the dispersion and cross-dispersion directions. However, since these images are in pixel space they can only be corrected by an integer number of pixels. The flt and counts images are corrected by the nearest integer to SHIFT1[A-C] and SHIFT2[A-C]. DPIXEL1[A-C] is the average of the difference between XFULL and the nearest integer to XFULL, where XFULL is the column by that name in the corrtag table. This is the average binning error in the dispersion direction when the flt and counts images are created from the corrtag table. DPIXEL1[A-C] is zero for ACCUM data. This shift is used when computing wavelengths during the X1DCORR step.
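As a small worked illustration of the two quantities in this paragraph, the sketch below computes the integer image shift and the DPIXEL1 value from a list of XFULL positions. The function name is hypothetical, and Python's built-in `round` (which rounds exact halves to the nearest even integer) stands in for whatever rounding rule calcos actually uses.

```python
def image_shift_and_dpixel(xfull, shift1):
    """Return the integer shift for the flt/counts images and DPIXEL1.

    xfull: XFULL values from the corrtag table (floats).
    shift1: measured dispersion-direction shift in pixels (float).
    """
    # Images live in pixel space, so only the nearest integer can be applied.
    integer_shift = round(shift1)
    # DPIXEL1 is the mean of (XFULL - nearest integer to XFULL), i.e. the
    # average binning error made when events are binned into pixels.
    dpixel1 = sum(x - round(x) for x in xfull) / len(xfull)
    return integer_shift, dpixel1
```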

3.4.13 BRSTCORR: Search for and Flag Bursts

This module flags "event bursts" in the FUV TIME-TAG data for removal.

- Reference files: BRSTTAB, BRFTAB, XTRACTAB or TWOZXTAB

- Input files: rawtag

- Header keywords updated: TBRST_A, TBRST_B (time affected by bursts in segments A and B), NBRST_A, NBRST_B (number of events flagged as bursts in segments A and B), EXPTIME, EXPTIMEA, EXPTIMEB.

The COS FUV detectors are of the same design as the detectors used on the FUSE mission. The FUSE detectors were seen to experience sudden, short-duration increases in counts while collecting data. These events, called bursts, led to very large count rates and occurred over the entire detector. Thus far, no bursts have been recorded on-orbit for the COS FUV detectors, and the default setting for BRSTCORR is OMIT. Nevertheless, it is possible that COS will exhibit similar bursts at some point, and so the BRSTCORR module remains in the pipeline and is available to identify bursts and flag their time intervals should they occur. This module can only be applied to FUV TIME-TAG data.

The first step in the screening process is to determine the count rate over the whole detector, including stim pulses, source, background, and bursts. This rate determines which time interval from the BRSTTAB table to use for screening.

Screening for bursts is then done in two steps. The first step identifies large count rate bursts by calculating the median of the counts in the background regions, defined in the XTRACTAB reference file, over certain time intervals (DELTA_T or DELTA_T_HIGH for high overall count rate data). Events with count rates larger than MEDIAN_N times the median are flagged as large bursts.

The search for small count rate bursts is done iteratively, up to MAX_ITER iterations. This step uses a boxcar smoothing of the background counts (taking the median within the box) and calculates the difference between the background counts and the running median. The boxcar smoothing is done over a time interval MEDIAN_DT or MEDIAN_DT_HIGH. Elements that have already been flagged as bursts are not included when computing the median. For an event to be flagged as affected by a small burst, the difference between the background counts and the running median has to be larger than all of the following quantities:

- A minimum burst count value: BURST_MIN * DELTA_T (or DELTA_T_HIGH for large overall count rates),

- A predetermined number of standard deviations above the background: STDREJ * square_root(background counts),

- A predetermined fraction of the source counts: SOURCE_FRAC * source counts.

The source counts value in the third criterion is the number of events in the source region defined in the XTRACTAB table minus the expected number of background counts within that region.
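The two screening tests can be sketched as follows. This is an illustration under stated assumptions rather than the calcos code: the function names are hypothetical, the parameters mirror the BRSTTAB column names used in the text, and the iteration, boxcar median, and choice between the normal and `_HIGH` parameters are left out.

```python
import math
from statistics import median


def flag_large_bursts(bkg_counts, median_n):
    """Flag time intervals whose background counts exceed MEDIAN_N x median."""
    med = median(bkg_counts)
    return [c > median_n * med for c in bkg_counts]


def is_small_burst(bkg_counts, running_median, source_counts,
                   burst_min, delta_t, stdrej, source_frac):
    """Apply the three small-burst criteria to a single time interval.

    The excess over the running median must be larger than every threshold.
    """
    excess = bkg_counts - running_median
    thresholds = (
        burst_min * delta_t,               # minimum burst count value
        stdrej * math.sqrt(bkg_counts),    # sigma above the background
        source_frac * source_counts,       # fraction of the source counts
    )
    return all(excess > threshold for threshold in thresholds)
```

The numerical values passed to these functions would come from the BRSTTAB row selected for the overall count rate; none are assumed here.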

All events that have been identified as bursts are flagged in the data quality column (DQ in the corrtag table) with data quality bit = 64. In addition calcos updates the following header keywords to take into account time and events lost to burst screening: TBRST_A and TBRST_B (time lost to bursts in segments A and B); NBRST_A, NBRST_B (number of events lost to bursts in segments A and B), EXPTIME, EXPTIMEA and EXPTIMEB.

When running calcos a user can specify that the information about bursts be saved into a file. This output text file contains four columns, each with one row per time interval (DELTA_T or DELTA_T_HIGH). Column 1 contains the time (seconds) at the middle of the time interval, column 2 contains the background counts for that time interval, column 3 contains a 1 for time intervals with large bursts and is 0 elsewhere, and column 4 contains a 1 for time intervals with small bursts and is 0 elsewhere.

Note: Although a systematic study has not been performed, as of May 2018, no bursts have been detected.

3.4.14 TRCECORR: Apply Trace Correction

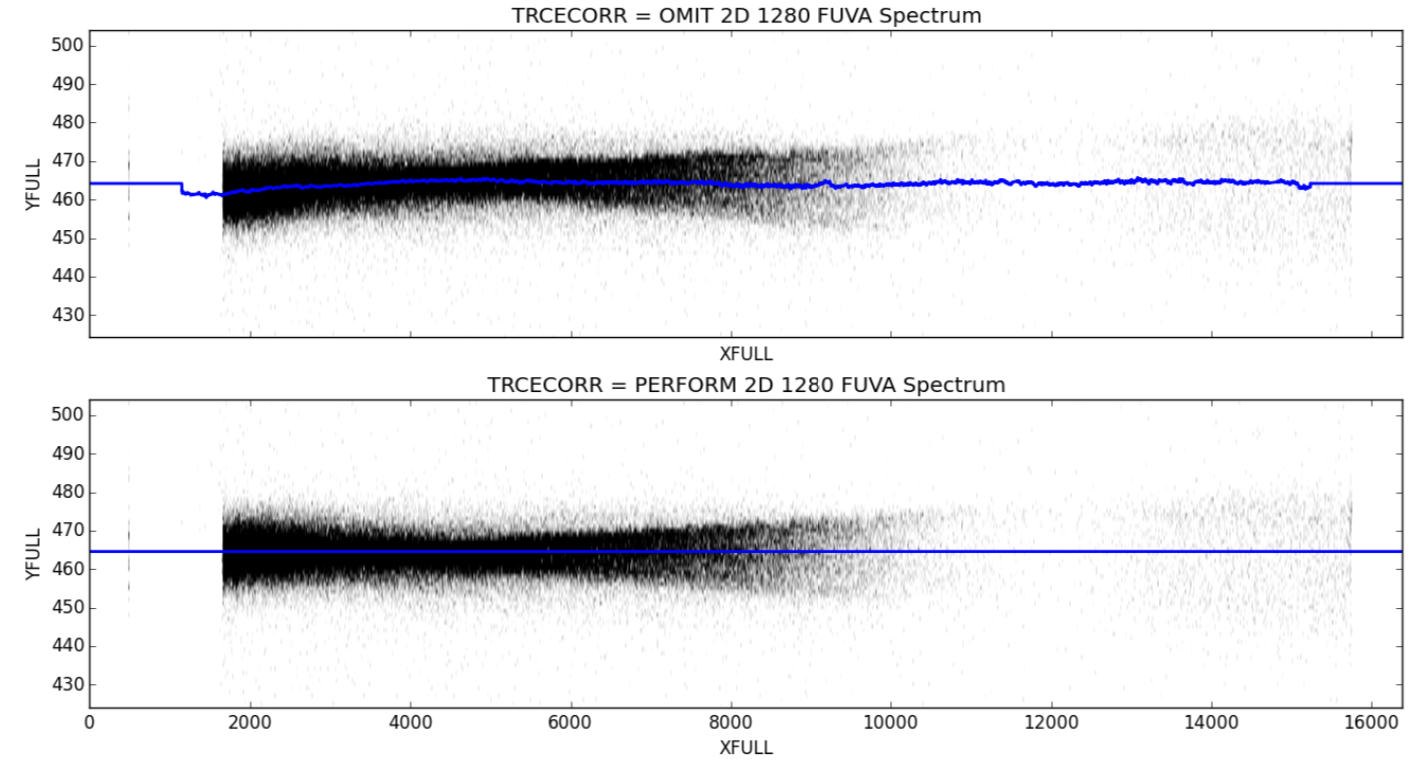

This module corrects for the fact that the spectral trace from a target in the FUV channel is not completely straight, but wanders up and down by several pixels over the full wavelength range due to uncorrected detector distortions.

- Reference file: TRACETAB

- Input files: rawtag, rawaccum

- Header keywords updated: none

Even after the geometric distortion correction is applied in the FUV channel, there remains some residual distortion that shows up as an unevenness of the spectral trace as a function of wavelength.

TRCECORR uses a TRACETAB reference file, which is a FITS table containing a row for each valid combination of {SEGMENT, OPT_ELEM, APERTURE, CENWAVE}. SEGMENT can be FUVA or FUVB (the trace correction is not performed for NUV data), OPT_ELEM can be any of the FUV gratings (G140L, G130M, G160M), APERTURE can be PSA or BOA (no trace correction is performed on the WCA aperture), and CENWAVE can be any valid value for its corresponding grating. The reference file is selected based on the value of the INSTRUME and LIFE_ADJ keywords. Currently, the trace correction is only performed on data with LIFE_ADJ=3 or greater (i.e., LP3 or greater).

Each row in the table contains an array of trace correction values, one for each integer value of XCORR (1–6384). The correction is applied by looping over all events in the corrtag file, linearly interpolating the trace correction at the (non-integer) value of XCORR, and subtracting this value from the YFULL value of the event. Only events that are inside the active area and outside the WCA aperture are corrected.
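The per-event interpolation can be sketched as below. The function name and the zero-based indexing of the `trace` list are assumptions for the example, and the check that an event lies inside the active area and outside the WCA aperture is omitted.

```python
import math


def apply_trace_correction(xcorr, yfull, trace):
    """Subtract the linearly interpolated trace correction from YFULL.

    xcorr, yfull: per-event coordinates (floats).
    trace: correction at each integer XCORR (index k <-> XCORR = k here,
    which is an assumption of this sketch).
    """
    corrected = []
    last = len(trace) - 1
    for x, y in zip(xcorr, yfull):
        x = min(max(x, 0.0), float(last))     # clamp to the tabulated range
        lo = min(math.floor(x), last - 1)     # left-hand tabulated point
        frac = x - lo
        correction = trace[lo] * (1.0 - frac) + trace[lo + 1] * frac
        corrected.append(y - correction)
    return corrected
```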

The spectral traces at LP3 for one CENWAVE of each of the COS FUV gratings are illustrated in Figure 3.10. We note that the traces at subsequent LPs are similar. The effect of the TRCECORR reduction step for the 1280 setting of the G140L grating is shown in Figure 3.11.

Note: It is intended that the TRCECORR step be used together with the ALGNCORR step and the TWOZONE algorithm in X1DCORR. While it is possible to use TRCECORR with other combinations of settings, the resulting calibrated products may not be optimal. For more information on customized TWOZONE reductions see Section 3.6.3.

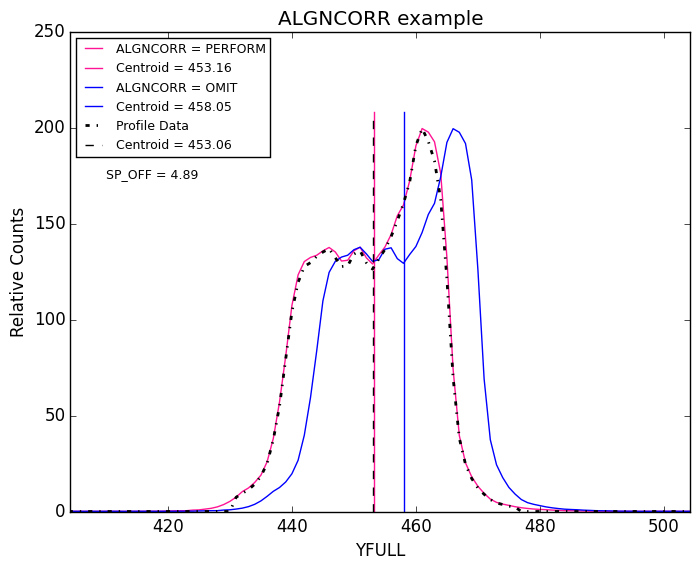

3.4.15 ALGNCORR: Alignment Correction

The ALGNCORR correction calculates and applies a constant shift to events to ensure that their centroid in the cross-dispersion direction is always the same as that of the reference profile in the PROFTAB reference file. The ALGNCORR step is intended to be used together with the TRCECORR step and with the TWOZONE algorithm in the X1DCORR step. Using ALGNCORR without TRCECORR or with the alternate BOXCAR algorithm of the X1DCORR step may produce unpredictable and poorly calibrated results.

- Reference files: PROFTAB, TWOZXTAB

- Input files: rawtag, rawaccum

- Header keywords updated: SP_LOC_A, SP_LOC_B (vertical location of spectrum in segments A, B), SP_ERR_A, SP_ERR_B (Poisson uncertainty in location of spectrum in segments A, B), and SP_OFF_A, SP_OFF_B (vertical shift applied to events in segments A, B)

When using the TWOZONE extraction algorithm, it is important to make sure that the 2D spectral image is accurately centered on the reference profile, since the inner region of the reference profile is significantly narrower than the full BOXCAR extraction region. This is accomplished in the ALGNCORR step by accurately measuring the flux-weighted centroid of the spectral profile in the cross-dispersion direction, and comparing that centroid to that of a reference 2D point-source spectral profile in the PROFTAB reference file. The offset between these two centroids is applied to the YFULL values of each event, in the sense of moving the centroid of the science spectrum so that it matches that of the reference profile.

The PROFTAB reference file is selected based on the values of the INSTRUME (must be COS) and LIFE_ADJ keywords (currently there are only reference files for Lifetime Position 3 and up). It is a FITS table containing a row for each valid combination of {SEGMENT, OPT_ELEM, APERTURE, CENWAVE}. SEGMENT can be FUVA or FUVB (the alignment correction is not performed for NUV data), OPT_ELEM can be any of the FUV gratings (G140L, G130M, G160M), APERTURE can be PSA or BOA (no alignment correction is performed on the WCA aperture), and CENWAVE can be any valid value for its corresponding grating.

The calibration step works by first binning events in the corrtag file to create images of the counts in (XFULL, YFULL) space. A mask is then calculated and applied to the data to filter out data that would bias the centroid measurement. Such data include regions around strong airglow lines, plus any data from a column that contains a pixel whose DQ value contains any of the bits corresponding to the SDQFLAGS that are read from the data header. In calcos version 3.1, this was changed so that DQ values flagging gain sagged regions (DQ=8192) are not used to mask out columns, even when that DQ value is included in SDQFLAGS. This allows the alignment step to work properly even when very wide gain sagged regions exist in the wing of the profile.

The mean number of counts per pixel in the background regions for the valid columns is calculated, and this mean value is subtracted from each pixel in the 2D science spectrum. The location of the background regions is defined in the TWOZXTAB table. The 2D background-subtracted spectrum is then collapsed along the dispersion direction (excluding columns with SDQFLAGS or containing airglow), and the flux-weighted centroid of the collapsed background-subtracted spectrum is calculated, along with its Poisson uncertainty. If this value differs from the current best value of the centroid by more than 0.005 pixels, the process is repeated with the background and science apertures shifted by the offset between the current and new centroid until convergence occurs.

Having calculated the flux-weighted centroid for the science data, the flux-weighted centroid of the reference profile is calculated using exactly the same mask for SDQFLAGS and the same background regions. The offset between the centroids of the science profile and the reference profile is applied to the YFULL values of every event that is inside the active area and outside the WCA aperture. The centroid, offset, and Poisson uncertainty are then written to the header of the science extension in keywords SP_LOC_A, SP_LOC_B, SP_OFF_A, SP_OFF_B, SP_ERR_A and SP_ERR_B.
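A single pass of the centroid comparison can be sketched as follows. This is a simplified illustration, not the calcos algorithm: the function names are hypothetical, the column mask for SDQFLAGS and airglow is assumed to have been applied already, the reference profile is taken as background-free, and the iteration to the 0.005 pixel tolerance is omitted.

```python
def flux_weighted_centroid(rows, values):
    """Centroid of a collapsed cross-dispersion profile."""
    return sum(r * v for r, v in zip(rows, values)) / sum(values)


def alignment_offset(image, ref_profile, sci_rows, bkg_rows):
    """Offset to add to YFULL so the science centroid matches the reference.

    image[row][col]: binned counts in (XFULL, YFULL) space, valid columns only.
    ref_profile[row]: reference profile already collapsed along dispersion.
    sci_rows, bkg_rows: row indices of the science and background regions.
    """
    bkg_pixels = [v for r in bkg_rows for v in image[r]]
    bkg_mean = sum(bkg_pixels) / len(bkg_pixels)

    # Subtract the mean background per pixel, then collapse along dispersion.
    collapsed = [sum(v - bkg_mean for v in image[r]) for r in sci_rows]

    sci_centroid = flux_weighted_centroid(sci_rows, collapsed)
    ref_centroid = flux_weighted_centroid(
        sci_rows, [ref_profile[r] for r in sci_rows])
    return ref_centroid - sci_centroid
```

In this sketch the science centroid corresponds to SP_LOC and the returned value to SP_OFF; the sign convention is chosen so that adding the offset to YFULL moves the science centroid onto the reference centroid.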

The ALGNCORR correction is illustrated in Figure 3.12.

3.4.16 DQICORR: Initialize Data Quality File

This module identifies pixels which are suspect in some respect and creates the DQ extension for the flt and counts images.

- Reference files: BPIXTAB, GSAGTAB, SPOTTAB, TRACETAB

- Input files: rawtag, rawaccum, images

- Header keywords updated: none

The DQICORR step assigns DQ values to affected events, and creates a DQ image extension that is used in the extraction step. It uses the Bad Pixel Table (BPIXTAB), Gain Sag Table (GSAGTAB) and Hotspot Table (SPOTTAB) to identify the regions that are relevant for the exposure using the following conditions:

- BPIXTAB: all regions included

- GSAGTAB: regions included if the DATE of the gain sag region is before the start of the exposure and the HVLEVEL of the gain sag extension matches that of the exposure

- SPOTTAB: regions included if the temporal extent of the spot (given by the START and STOP times of the spot region) overlaps the good time intervals of the exposure.

The COS data quality flags are discussed in Section 2.7.2 and are listed in Table 2.19. Figure 3.13 shows examples of the types of regions isolated by the DQ flags and the effect they can have on an extracted spectrum. DQICORR proceeds differently for TIME-TAG and ACCUM mode exposures, but the flags in the flt and counts images are created similarly in preparation for spectral extraction. Consequently, we describe each mode separately.

TIME-TAG:

DQICORR compares the XCORR, YCORR pixel location of each event in the corrtag file to the relevant rectangular regions as described above. The value in the DQ column for that event is then updated with the flags of all the regions (if any) that contain that pixel location. When the flt and counts images are generated from the corrtag file, photons which arrived during bad times or bursts are omitted from the image and ERR array. For FUV data, events whose PHAs were flagged as out of bounds are omitted as well. However, data with spatial DQ flags are retained at this stage. The third FITS extension of the flt and counts files is an array of data quality values generated directly from the BPIXTAB, GSAGTAB, and SPOTTAB reference files. If DOPPCORR=PERFORM, the included locations are Doppler-smeared and the flags from all neighboring pixels that contribute to the flt and counts image pixels are combined.
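The per-event flagging amounts to a point-in-rectangle test followed by a bitwise OR. The sketch below illustrates this under simplifying assumptions: events and regions are plain tuples rather than FITS table rows, the tuple layouts and the function name are hypothetical, and the selection of which regions apply to the exposure is assumed to have been done beforehand.

```python
def flag_events(events, regions):
    """OR the flag of every region containing an event into that event's DQ.

    events: iterable of (xcorr, ycorr, dq) tuples.
    regions: iterable of (x_min, x_max, y_min, y_max, flag) rectangles,
    with inclusive bounds (an assumption of this sketch).
    """
    flagged = []
    for x, y, dq in events:
        for x_min, x_max, y_min, y_max, flag in regions:
            if x_min <= x <= x_max and y_min <= y <= y_max:
                dq |= flag
        flagged.append((x, y, dq))
    return flagged
```

Because flags are combined with OR, an event lying in two overlapping regions carries both bits, and any DQ bits already set on the event are preserved.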

ACCUM:

For ACCUM exposures, the rawaccum image file will already have a third FITS extension of data quality values if any pixel had been flagged when constructing the raw image (the third extension does not exist for TIME-TAG data). The extension will be a null image if all initial data quality flags are zero. This is the case for NUV data, but not for FUV. For FUV ACCUM exposures, photons are collected for only part of the detector segment and an initial data quality array is created to mark the pixels outside those subimage boundaries (flag=128, outside active area), so there will always be data flagged as missing. When calcos creates the flt and counts images, it first converts the rawaccum image to a pseudo-time-tag table. In this table, the DQ column is updated with the DQ flags from BPIXTAB just as for the TIME-TAG data. In addition, the third extension of the flt and counts files contains a Doppler-smeared version of the BPIXTAB, GSAGTAB, and SPOTTAB reference files, but it also includes the initial flag assignments in the rawaccum DQ extension.

BPIXTAB according to the feature type, e.g., a "wrinkle" is a kind of detector flaw and grid wire is an example of a detector shadow. Dead Spots are also known as Low Response Regions.

To select an alternative definition of SDQFLAGS, the user should modify the rawtag or rawaccum header and reprocess the file with calcos.

3.4.17 STATFLAG: Report Simple Statistics

This module computes some statistical measures that provide general information about COS science observations.

- Reference files: TWOZXTAB or XTRACTAB, BRFTAB

- Input files: flt, counts, x1d, lamptab

- Header keywords updated: NGOODPIX, GOODMEAN, GOODMAX

STATFLAG enables the reporting of statistics for COS observations. STATFLAG is enabled by default for all science observations and operates on x1d, counts, and flt data products. STATFLAG is intended to provide a very basic statistical characterization of the events and locations on the detectors that are known to be good.

STATFLAG reports the following statistics:

- NGOODPIX: The number of good pixels or collapsed spectral columns. For the counts and flt images, this is the number of pixels in the spectral extraction or imaging region. For the x1d file, each 'Y' column in the spectral extraction region of the flt file is combined to produce the one-dimensional spectrum. The DQ of each column is the logical OR of the DQ flags of the individual pixels. Only collapsed spectral columns that pass the DQ conditions indicated by SDQFLAGS are considered good for purposes of calculating statistics.

- GOODMEAN: The mean of the good bins in counts per bin. For the counts and flt files, a bin is an individual pixel, while for x1d files, a bin is a collapsed spectral column.

- GOODMAX: The maximum of the good bins in the same units as the mean.
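The three statistics can be sketched for a list of bins as follows. This is a minimal illustration with a hypothetical function name; a bin is treated as good when none of its DQ bits overlap SDQFLAGS, and returning zeros when no bin is good is an assumption of the example.

```python
def good_statistics(values, dq, sdqflags):
    """Return (NGOODPIX, GOODMEAN, GOODMAX) for the bins that pass SDQFLAGS.

    values: counts per bin (pixels for flt/counts, columns for x1d).
    dq: data quality value of each bin.
    sdqflags: bit mask of serious data quality flags.
    """
    good = [v for v, q in zip(values, dq) if (q & sdqflags) == 0]
    if not good:
        return 0, 0.0, 0.0   # assumption: report zeros when nothing is good
    return len(good), sum(good) / len(good), max(good)
```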

3.4.18 X1DCORR: Locate and Extract 1-D Spectrum

This module extracts a one-dimensional spectrum from the image of the spectrum on the detector.

- Reference files: TWOZXTAB or XTRACTAB, WCPTAB

- Input files: flt, counts

- Header keywords updated: SP_LOC_[ABC], SP_OFF_[ABC], SP_NOM_[ABC], SP_SLP_[ABC], SP_HGT_[ABC]

- Creates x1d files

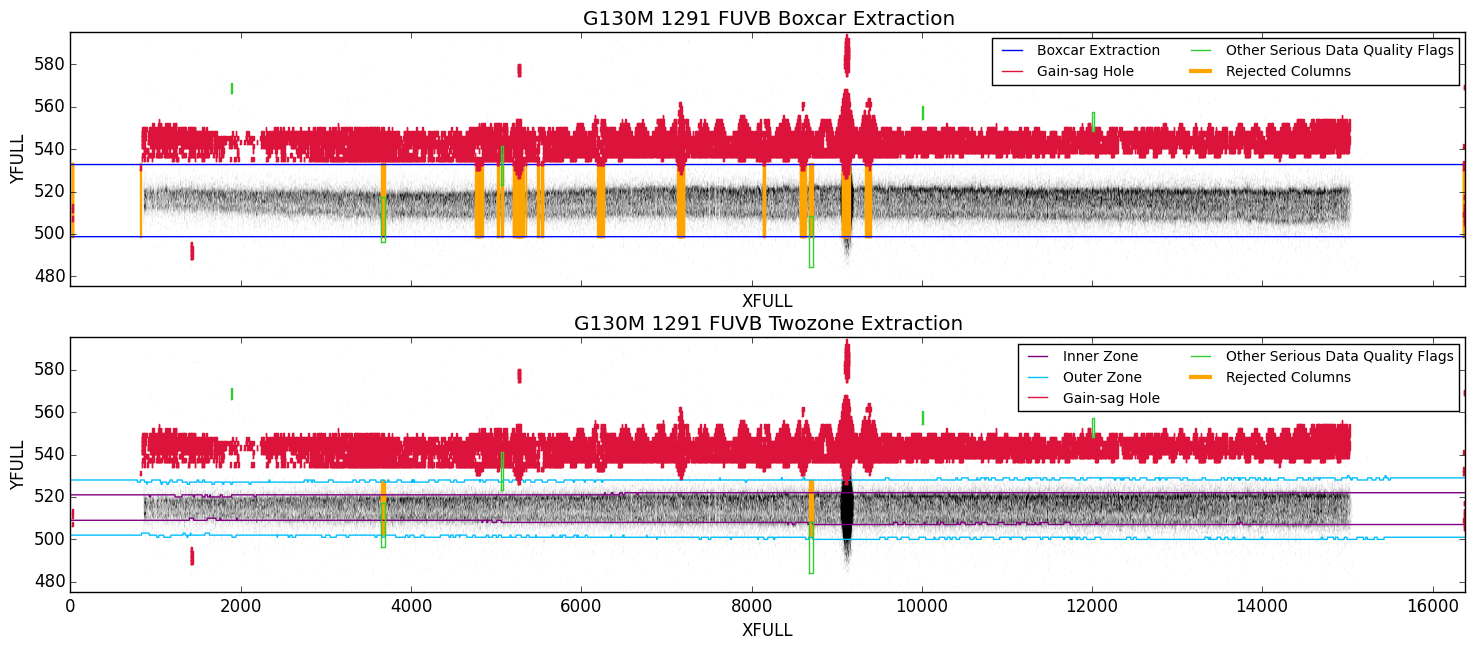

A 1-D spectrum and its error array are extracted from the flt and counts images by summing the counts in the cross-dispersion direction within a band centered on the spectrum. The data are not resampled in the dispersion direction. Wavelengths are assigned by evaluating a polynomial function (dispersion relation) in pixel coordinates. The background is subtracted (see BACKCORR; Section 3.4.19) to derive the net count rate, and the absolute flux is computed from the net count rate (see FLUXCORR; Section 3.4.20). With calcos version 3.0 or later, X1DCORR added support for the new TWOZONE extraction algorithm in addition to the older BOXCAR algorithm. The fundamental differences between these algorithms are the way the regions are chosen for the extraction of the data and the method of combining data quality flags in these regions. These differences are described below. Note, however, that the TWOZONE algorithm is designed to be used with the new TRCECORR and ALGNCORR steps, while for the BOXCAR, these steps should always be set to OMIT.

CASE WITH XTRCTALG=BOXCAR

When using the BOXCAR algorithm, the parameters controlling the extraction are taken from the XTRACTAB reference file described in Section 3.7.12 and Table 3.9.

The spectral extraction of a source is performed by collapsing the data within a parallelogram of height HEIGHT that is centered on a line whose slope and intercept are given by SLOPE and B_SPEC, respectively. Similarly, two background spectra are determined by collapsing the data within parallelograms of height B_HGT1 and B_HGT2 centered on the lines defined by SLOPE and B_BKG1, and SLOPE and B_BKG2, respectively. The background spectra are then smoothed in the dispersion direction by a boxcar of width BWIDTH. These are then scaled and subtracted from the source spectrum.
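The parallelogram sum and the boxcar smoothing can be sketched as below. This is an illustration only: the function names are hypothetical, whole pixels are summed with the band start rounded to the nearest row (calcos may treat band edges differently), and the smoothing window is simply shortened at the ends of the array.

```python
def boxcar_extract(image, slope, intercept, height):
    """Sum `height` rows centred on the line intercept + slope * column.

    image[row][col] holds counts; returns one summed value per column.
    """
    n_rows, n_cols = len(image), len(image[0])
    spectrum = []
    for col in range(n_cols):
        centre = intercept + slope * col
        lo = int(round(centre - (height - 1) / 2))
        rows = range(max(lo, 0), min(lo + height, n_rows))
        spectrum.append(sum(image[r][col] for r in rows))
    return spectrum


def boxcar_smooth(values, width):
    """Running mean of odd `width` along the dispersion direction."""
    half = width // 2
    smoothed = []
    for i in range(len(values)):
        window = values[max(i - half, 0): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed
```

The same `boxcar_extract` call, with B_BKG1 or B_BKG2 as the intercept and B_HGT1 or B_HGT2 as the height, would give the two background spectra that are then passed through `boxcar_smooth` with BWIDTH.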

CASE WITH XTRCTALG=TWOZONE

For the TWOZONE algorithm, the parameters controlling the extraction are taken from the TWOZXTAB reference file. The appropriate row from this table is selected based on the detector SEGMENT, OPT_ELEM, CENWAVE, and APERTURE.

The TWOZONE algorithm assumes that the spectrum has been straightened using the TRCECORR step of calcos and aligned with the appropriate reference profile by the ALGNCORR step.

When performing the TWOZONE extraction, calcos divides the spectral extraction region into two parts. One region, referred to as the "inner zone," defines the vertical extent of the region over which data quality flags are considered for inclusion in the output data quality array, while the other, referred to as the "outer zone," defines the region over which events are summed to produce the GROSS flux and other vectors calculated from the flt and counts images (see Figure 3.14). Note that, despite its name, the outer zone will include the entire region included in the inner zone. The upper and lower boundaries of these zones vary as a function of position in the dispersion direction.

After the TWOZONE algorithm had been developed and implemented for the FUV, a region of transient elevated counts appeared (known as a "hotspot"). These events could seriously affect the extracted FUV flux if they are included whether they are in the inner or outer zone, so in calcos version 3.1 code was added to ensure that columns with DQ flags that match those in the SDQOUTER keyword are not included in any summed spectra.

The locations of these zone boundaries are calculated using the quantities HEIGHT, B_SPEC, LOWER_OUTER, UPPER_OUTER, LOWER_INNER, and UPPER_INNER taken from the TWOZXTAB reference file, together with the reference profile from the appropriate row of the PROFTAB reference file, which is selected based on the exposure SEGMENT, OPT_ELEM, CENWAVE, and APERTURE. The reference profile is truncated to HEIGHT rows centered at location B_SPEC. In each column of the profile, a cumulative sum of the profile is calculated and normalized to unity, so that it runs from 0 to 1 over the height of the profile. This cumulative sum is interpolated to find the locations at which it crosses the LOWER_OUTER, UPPER_OUTER, LOWER_INNER, and UPPER_INNER boundaries. These interpolated boundaries are rounded outwards to integer pixel values, so the UPPER values are rounded up and the LOWER values rounded down. The final integer boundary values are then placed in the vectors Y_LOWER_OUTER, Y_UPPER_OUTER, Y_LOWER_INNER, and Y_UPPER_INNER, and these are used to define the region of the flt and counts images over which the GROSS and NET are summed and over which the data quality values are combined.
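For a single column of the truncated reference profile, the boundary calculation can be sketched as follows. The function names are hypothetical, and the convention that the cumulative value at row j is the fraction enclosed at and below row j is an assumption of this example; returned rows are relative to the start of the truncated profile.

```python
import math
from itertools import accumulate


def crossing(cumulative, fraction):
    """Fractional row at which the normalized cumulative sum reaches `fraction`."""
    if fraction <= cumulative[0]:
        return 0.0
    for j in range(1, len(cumulative)):
        lo, hi = cumulative[j - 1], cumulative[j]
        if lo < fraction <= hi:
            return (j - 1) + (fraction - lo) / (hi - lo)
    return float(len(cumulative) - 1)


def zone_rows(profile_column, lower_fraction, upper_fraction):
    """Integer (lower, upper) rows enclosing the requested flux fractions.

    Boundaries are rounded outwards: lower down, upper up.
    """
    total = sum(profile_column)
    cumulative = [c / total for c in accumulate(profile_column)]
    y_lower = math.floor(crossing(cumulative, lower_fraction))
    y_upper = math.ceil(crossing(cumulative, upper_fraction))
    return y_lower, y_upper
```

Calling `zone_rows` once with the inner fractions and once with the outer fractions gives the four boundaries for that column; because the rounding is outward, the enclosed fraction is never smaller than requested.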

The background region selection for the TWOZONE algorithm is done in a similar way as for the BOXCAR algorithm. The background per pixel is calculated for each column by dividing the total background counts by the number of background pixels. This value is subtracted from the intensity in every pixel in the science aperture.

Output arrays

This section provides the details of the spectral extraction process and the construction of the arrays that populate the x1d files. Table 3.1 lists these arrays along with others that are used to calculate them. Names listed in capital letters in this table correspond to columns in the x1d.fits files. Names given in lower case refer to temporary quantities used in the calculations that are not included in the output files, but which are used in the definition of some of the included quantities. The summed x1dsum[n] files are described in Section 3.4.22.

Table 3.1: Variables used in 1-D Spectral Extraction.

| Variable | Description |
|---|---|
| SEGMENT | A string array listing the segments/stripes contained in the file |
| NELEM | An integer listing the number of elements in the extracted arrays |
| EXPTIME | The exposure times used for each segment, in double-precision format |
| e[i] | Effective count rate, extracted from the flt file |
| GROSS[i] | Gross count rate, extracted from the counts file |
| GCOUNTS[i] | Gross counts |
| BACKGROUND[i] | Smoothed background count rate, extracted from the counts file |
| eps[i] | e[i] / GROSS[i] |
| NET[i] | Net count rate = eps[i] (GROSS[i] – BACKGROUND[i]) |
| ERROR[i] | Upper bound of internal error estimate |
| ERROR_LOWER[i] | Lower bound of internal error estimate |
| VARIANCE_FLAT[i] | Term used for calculating the internal error due to the flat-field error |
| VARIANCE_COUNTS[i] | Term used for calculating the internal error due to the gross counts |
| VARIANCE_BKG[i] | Term used for calculating the internal error due to the background counts |
| FLUX[i] | Calibrated flux |
| WAVELENGTH[i] | Wavelength scale in Angstroms |
| DQ_WGT[i] | Weights array |
| DQ | Bitwise OR of the DQ in the extraction region |
| snr_ff | The value of keyword SNR_FF from the flat-field reference image |
| extr_height | The number of pixels in the cross-dispersion direction that are added together for each pixel of the spectrum |
| bkg_extr_height | The number of pixels in the cross-dispersion direction in each of the two background regions |
| bkg_smooth | The number of pixels in the dispersion direction for boxcar-smoothing the background data |
| bkg_norm | float(extr_height) / (2.0 * float(bkg_extr_height)) |
| calcos 3.0 added the following new variables | |
| DQ_ALL[i] | Data quality flags over the full extraction region |
| NUM_EXTRACT_ROWS[i] | Number of extracted rows |
| ACTUAL_EE[i] | Actual energy enclosed between outer zone boundaries |
| Y_LOWER_OUTER[i] | Index of lower outer extraction zone boundary |
| Y_LOWER_INNER[i] | Index of lower inner extraction zone boundary |
| Y_UPPER_INNER[i] | Index of upper inner extraction zone boundary |
| Y_UPPER_OUTER[i] | Index of upper outer extraction zone boundary |
| BACKGROUND_PER_PIXEL[i] | Average background per pixel |
| lower_outer_value[i] | Fraction of flux enclosed at and below row Y_LOWER_OUTER |
| lower_inner_value[i] | Fraction of flux enclosed at and below row Y_LOWER_INNER |
| upper_inner_value[i] | Fraction of flux enclosed at and below row Y_UPPER_INNER |
| upper_outer_value[i] | Fraction of flux enclosed at and below row Y_UPPER_OUTER |

Note: Variables beginning with a capital letter are saved in the output x1d file. An "[i]" represents array element i in the dispersion direction.

The columns in the x1d files are now described in more detail.

SEGMENT: A string array listing the segments/stripes contained in the file.

NELEM: An integer listing the number of elements in the extracted arrays.

EXPTIME: The exposure times used for each segment (which can differ for FUV data), in double-precision format.

GROSS: The GROSS count rate spectrum is obtained from the counts file by summing over the extraction region. While, as described earlier in this section, the definition of the extraction region differs between the BOXCAR and TWOZONE algorithm, in each case the sum over each cross dispersion column runs from the Y_LOWER_OUTER to Y_UPPER_OUTER location listed in the x1d output table. These sums always include the endpoints.

GCOUNTS: This is simply the number of gross counts, or GROSS times EXPTIME.

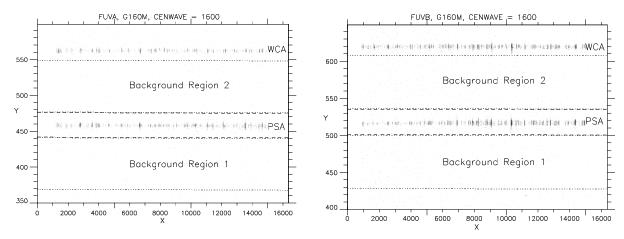

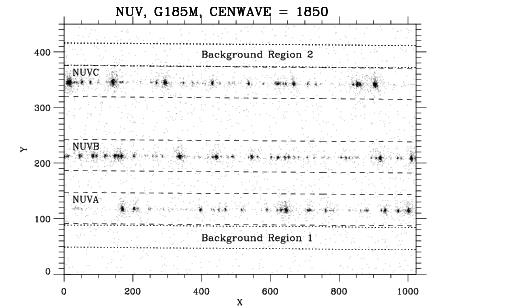

BACKGROUND: Two background regions are sampled on the counts array to obtain a mean background count rate spectrum. For FUV data, these are above and below the spectrum (see Figure 3.15). For NUV data they are above stripe C and below stripe A (Figure 3.16). The background regions are extracted in the same way as the spectrum. The values in the two background regions are added, boxcar-smoothed in the dispersion direction, and scaled by the sizes of their extraction regions before being subtracted from the science spectrum. Details of the background extractions are given in Section 3.4.19.

NET: The NET spectrum is the difference between the GROSS spectrum and a properly scaled BACKGROUND spectrum multiplied by an array which accounts for flat-field and dead-time effects. This array is eps[i] = e[i]/GROSS[i], where e[i] is an element in an array extracted from the flt file in exactly the same way as the GROSS spectrum is extracted from the counts file. Consequently, this factor corrects the NET spectrum for flat-field and dead-time effects. When the TWOZONE algorithm is used, an additional correction factor of 1/ACTUAL_EE is also applied to account for the actual enclosed energy fraction in each column.
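The NET calculation described here can be written out element by element. The sketch below is illustrative: the function name is hypothetical, BACKGROUND is assumed to be already scaled to the source extraction region, and setting eps[i] to 1 where GROSS[i] is zero is an assumption made only to avoid dividing by zero.

```python
def net_count_rate(gross, effective, background, actual_ee=None):
    """NET[i] = eps[i] * (GROSS[i] - BACKGROUND[i]), eps[i] = e[i] / GROSS[i].

    gross: GROSS count rate from the counts file.
    effective: e[i], extracted from the flt file in the same way.
    background: scaled, smoothed background count rate.
    actual_ee: enclosed-energy fraction per column (TWOZONE only); if given,
    NET is additionally divided by it.
    """
    net = []
    for i, (g, e, b) in enumerate(zip(gross, effective, background)):
        eps = e / g if g != 0 else 1.0   # assumption for empty columns
        value = eps * (g - b)
        if actual_ee is not None:
            value /= actual_ee[i]
        net.append(value)
    return net
```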

ERROR and ERROR_LOWER: The ERROR and ERROR_LOWER arrays, which represent the upper and lower 1-sigma flux errors, are calculated via error propagation of the net count rate equation:

where N_i is the net count rate, e_i is the inefficiency factor, GC_i is the gross count rate, and BK_i is the background count rate per wavelength bin (i). Propagating the various error terms leads to the final error equations:

where f_{u,l} is the upper/lower "Frequentist-Confidence" Poisson error estimate from the astropy.stats.poisson_conf_interval function. The raw ERROR and ERROR_LOWER arrays involve elements from both the flt and counts files. The ERROR and ERROR_LOWER arrays contained in the _x1d files differ from the flt and counts files in the sense that the _x1d files have the absolute flux calibration applied (i.e., the net count rate errors are converted into flux errors; see Section 3.4.20).

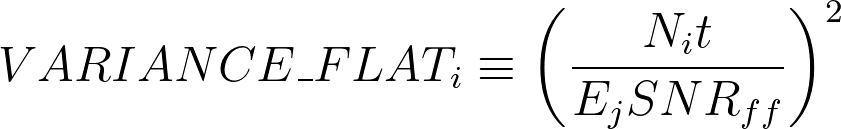

VARIANCE_FLAT: The first term from the ERROR and ERROR_LOWER equations that gives the contribution to the total flux error provided by the flat-field uncertainty. The value in the VARIANCE_FLAT field is calculated as:

where N_i is the net count rate and t is the exposure time. The product of the number of columns summed (E_j) and the S/N of the flat-field (SNR_ff) can be determined from simple arithmetic.

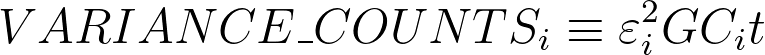

VARIANCE_COUNTS: The second term from the ERROR and ERROR_LOWER equations that gives the contribution to the total flux error provided by the gross count uncertainty. The value in the VARIANCE_COUNTS field is calculated as:

where e_i is the inefficiency factor, GC_i is the gross count rate, and t is the exposure time.

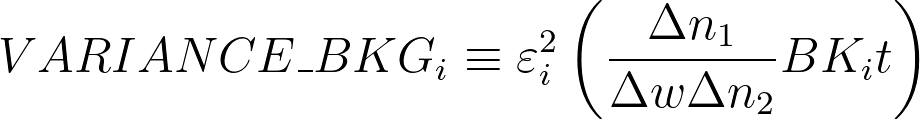

VARIANCE_BKG: The third term from the ERROR and ERROR_LOWER equations that gives the contribution to the total flux error provided by the smoothed background uncertainty. The value in the VARIANCE_BKG field is calculated as:

where e_i is the inefficiency factor, BK_i is the background count rate, and t is the exposure time. The additional term that includes the height and width of the background smoothing box can be solved for with simple arithmetic.

FLUX: The FLUX array in the x1d file is the NET spectrum corrected by the appropriate time dependent sensitivity curve. The details of this process are discussed in Section 3.4.20.

WAVELENGTH: As part of the spectral extraction, calcos assigns wavelengths to pixels in the extracted spectra using dispersion coefficients from the reference table DISPTAB. Wavelengths correspond to the center of each pixel. For each segment or stripe, grating, central wavelength, and aperture, the DISPTAB table contains the dispersion solution with respect to the template spectral lamp table that was used in the WAVECORR step. The dispersion solution has the following form:

$$\mathrm{WAVELENGTH}[i] = A_0 + A_1\,x[i] + A_2\,x[i]^2 + A_3\,x[i]^3$$

where WAVELENGTH[i] is the wavelength in Angstroms, x[i] is the pixel coordinate in the dispersion direction, and A_0 through A_3 are the dispersion coefficients.
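Evaluating the cubic dispersion relation is straightforward with Horner's scheme, as in the sketch below. The function name is hypothetical and no real DISPTAB coefficients are assumed; the caller supplies the coefficients in the order A_0, A_1, A_2, A_3.

```python
def wavelength_at(x, coefficients):
    """Evaluate A_0 + A_1*x + A_2*x**2 + A_3*x**3 (any polynomial order).

    x: pixel coordinate in the dispersion direction.
    coefficients: sequence (A_0, A_1, A_2, ...), lowest order first.
    """
    result = 0.0
    for a in reversed(coefficients):   # Horner's scheme
        result = result * x + a
    return result
```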

DQ_WGT: The DQ_WGT array has one point for each extracted point in the spectrum. It is 0 or 1 depending on whether the DQ for a given point is allowed according to the header keyword, SDQFLAGS. The SDQFLAGS value depends on the configuration of the instrument. These SDQFLAGS values set the DQ_WGT to 0 for events that are near the edge of the detector, dead spots, hot spots or outside the subarray (see Table 2.19). Otherwise, DQ_WGT = 1. The DQ_WGT array is used to construct the x1dsum file discussed in Section 3.4.22.

DQ: The DQ array in the x1d file is the bitwise OR of the members of the DQ array contained in the third FITS extension of the counts file. For the BOXCAR extraction, this includes all of the points in the counts image that contribute to an element of the GROSS spectrum. Consequently, if anything is flagged within the extraction region, it is reflected in the x1d DQ array. For the TWOZONE extraction, the DQ flags in each column are only combined from Y_LOWER_INNER to Y_UPPER_INNER. This causes DQ flags included only in the outer zone to be ignored, unless they are in the DQ value SDQOUTER from the primary header, in which case they are included in the value of DQ.

DQ_ALL: The DQ_ALL array gives the DQ value for the full outer zone extraction region in the case of TWOZONE extraction, otherwise it gives the same value as in the DQ array.
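The relationship between DQ and DQ_ALL for a single column in a TWOZONE extraction can be sketched as follows. This is an illustration under stated assumptions, not the calcos implementation: the boundaries are treated as inclusive integer row indices, and the function name is hypothetical.

```python
def combine_dq(column_dq, y_lower_outer, y_lower_inner,
               y_upper_inner, y_upper_outer, sdqouter):
    """Return (dq, dq_all) for one column; column_dq is indexed by row."""
    dq_inner = 0
    dq_all = 0
    for row in range(y_lower_outer, y_upper_outer + 1):
        dq_all |= column_dq[row]          # everything inside the outer zone
        if y_lower_inner <= row <= y_upper_inner:
            dq_inner |= column_dq[row]    # inner zone only
    # Flags seen only in the outer zone survive only if listed in SDQOUTER.
    dq = dq_inner | (dq_all & sdqouter)
    return dq, dq_all
```

For BOXCAR extraction the inner and outer boundaries coincide, so both returned values are the same.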

NUM_EXTRACT_ROWS: This gives the height of the extraction aperture as a function of column number. For BOXCAR extraction, this will be a constant equal to the height of the extraction region. For TWOZONE extraction, it will vary with column number/wavelength.

ACTUAL_EE: With BOXCAR extraction, this will be 1.0. With TWOZONE extraction, this will vary from column to column. Extraction is done on whole pixels, so while the outer boundaries are supposed to enclose the fraction of the flux specified by the difference between the LOWER_OUTER and UPPER_OUTER values taken from the TWOZXTAB, in practice the height equals Y_UPPER_OUTER-Y_LOWER_OUTER+1. The actual fraction of the encircled energy that is enclosed is reported in the ACTUAL_EE variable.

Y_LOWER_OUTER, Y_LOWER_INNER, Y_UPPER_INNER, Y_UPPER_OUTER: These variables give the row number of the boundaries defining the inner and outer regions. In the case of spectra extracted using the BOXCAR algorithm, the inner and outer indices are the same, and the indices follow the slant of the extraction aperture.

BACKGROUND_PER_PIXEL: This gives the smoothed background per pixel, i.e., the total background in the extraction aperture divided by the number of rows included in the background aperture for each column.

Finally, the SP_* keywords (listed in Table 2.15) provide useful information on the location of the spectrum in the cross-dispersion direction and the location where the spectrum is extracted. When XTRCTALG=TWOZONE, ALGNCORR is set to PERFORM and the ALGNCORR task is used to set these keywords. See the description of the ALGNCORR section for a discussion of how these keywords are populated in that case. When ALGNCORR=OMIT, as is normally the case for XTRCTALG=BOXCAR, these values are set by the X1DCORR task as follows. The actual location of the spectrum is found from the flt file through a two-step process. First, the image of the active area is collapsed along the dispersion direction to produce a mean cross-dispersion profile. Second, a quadratic is fit to a region one full width at half maximum wide (with a minimum of 5 pixels) centered on the maximum of the profile. The difference between this value and the expected location, SP_NOM_A[B], is given as SP_OFF_A[B]. The actual location where the spectrum is extracted is given by SP_LOC_A[B]. For BOXCAR pipeline extractions, SP_LOC_A[B] = SP_NOM_A[B], and SP_OFF_A[B] is listed for informational purposes only. However, it is possible to override these values and extract a spectrum at the SP_OFF location, or any other, by using the standalone version of x1dcorr discussed in Section 5.1.1.
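The two-step location search described above (collapse, then quadratic fit around the peak) can be sketched as follows. This is a rough illustration under several assumptions: the half-maximum is measured from zero with no background removed, the fit window is widened symmetrically to at least 5 pixels, and all function names are hypothetical rather than calcos routines.

```python
def collapse_along_dispersion(image):
    """Mean cross-dispersion profile: average each row over all columns."""
    return [sum(row) / len(row) for row in image]


def det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def fit_quadratic(xs, ys):
    """Least-squares fit of y = c0 + c1*x + c2*x**2 (normal equations)."""
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[0], s[1], s[2]], [s[1], s[2], s[3]], [s[2], s[3], s[4]]]
    d = det3(m)
    coeffs = []
    for col in range(3):                 # Cramer's rule, one column at a time
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = t[r]
        coeffs.append(det3(mc) / d)
    return coeffs


def spectrum_location(profile, min_width=5):
    """Row of the profile maximum, refined by a quadratic fit."""
    last = len(profile) - 1
    peak = max(range(len(profile)), key=profile.__getitem__)
    half_max = profile[peak] / 2.0
    lo = hi = peak
    while lo > 0 and profile[lo - 1] >= half_max:
        lo -= 1
    while hi < last and profile[hi + 1] >= half_max:
        hi += 1
    half = (min_width - 1) // 2          # enforce the minimum fit width
    lo = max(0, min(lo, peak - half))
    hi = min(last, max(hi, peak + half))
    xs = [row - peak for row in range(lo, hi + 1)]   # offsets from the peak
    c0, c1, c2 = fit_quadratic(xs, profile[lo:hi + 1])
    return peak - c1 / (2.0 * c2)        # vertex of the fitted parabola
```

In terms of the keywords above, the returned value minus SP_NOM_A[B] would correspond to SP_OFF_A[B].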

3.4.19 BACKCORR: 1D Spectral Background Subtraction

This module determines the background contribution to the extracted spectrum and subtracts it.

- Reference file: XTRACTAB or TWOZXTAB

- Input files: flt, counts

- Header keywords updated: none

The BACKCORR module computes the number of counts in the background regions, scales them by the ratio of sizes of the spectral extraction region to background regions, and then subtracts that value from the extracted spectrum at each wavelength. There are two background regions defined. For FUV data at LP3 and LP4, there is one above and one below the object spectrum (see Figure 3.15). For LP5 and later, consult the COS Instrument Science Reports (ISRs). For the NUV spectra, the two regions are above and below the three stripes (see Figure 3.16). Each background region is a parallelogram with the same slope used to define the object extraction region, but with different y-intercepts. The parameters of the background extraction region in the FUV are:

- HEIGHT: the full height (along the cross-dispersion) of the object extraction region in pixels

- BHEIGHT (for TWOZONE extraction) or B_HGT1 and B_HGT2 (for BOXCAR extraction): the full height (along the cross-dispersion) of the background regions in pixels. The upper and lower edges of each background region are defined as ±(BHEIGHT–1)/2 pixels from the line tracing the center of each region

- BWIDTH: the full width (along the dispersion) of the box-car average performed on the background

- B_BKG1: y-intercept of first background region

- B_BKG2: y-intercept of second background region

- SLOPE: the slope of the line tracing the centers of both the spectrum and background regions

The centers of background regions 1 and 2 in the cross-dispersion (Y) direction follow a linear function in the dispersion (X) direction according to the function:

| \mathrm{Y = mX +b} |

where m is the slope of the background (keyword SLOPE), and b is the Y-intercept of the background region (B_BKG1 or B_BKG2). At the i-th pixel along the dispersion direction (X) the background is computed by first summing all of the counts in pixels in the cross-dispersion within ±(BHEIGHT/2) of the central Y pixel of the background box. Data in flagged regions, as defined by the DQ flags, are ignored, and counts that occur during bad time intervals or that have out-of-bounds PHAs never make it to the counts file. If a flagged region does not cover the full height of a background region, the count rate in the non-flagged region will be scaled up to account for the omitted region.

Once the counts are summed for all X pixels, the result is averaged over ±BWIDTH/2 pixels along the dispersion direction. This gives a local average background (with known anomalous pixels such as dead spots or strong hot spots excluded). Both background regions are computed in this way, and then they are summed and divided by two to yield an average background rate. This average is then scaled to the number of pixels in the object extraction box by multiplying it by the factor HEIGHT/(2*BHEIGHT). The result is the background count rate BK[i] in Table 3.1, which is written to the BACKGROUND column in the x1d file. The background-subtracted count rate (corrected for flat field and dead time) is written to the NET column in the x1d table.
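The background estimate described above can be sketched as follows. This is a simplified illustration, not the calcos code. It assumes an odd BHEIGHT so that each region spans exactly BHEIGHT rows, a single BHEIGHT for both regions, and a boxcar window truncated at the array edges. It also applies the factor HEIGHT/(2*BHEIGHT) to the sum of the two regions, which scales by the ratio of the extraction-region height to the total background height; that reading of the text is an assumption. All function names are hypothetical.

```python
def region_sum(image, dq, slope, intercept, bheight, sdqflags):
    """Per-column sum of unflagged counts in a band around Y = m*X + b."""
    ny, nx = len(image), len(image[0])
    half = (bheight - 1) // 2
    sums = []
    for x in range(nx):
        center = int(round(slope * x + intercept))
        rows = [y for y in range(center - half, center + half + 1)
                if 0 <= y < ny]
        good = [y for y in rows if not (dq[y][x] & sdqflags)]
        if not good:
            sums.append(0.0)
            continue
        total = sum(image[y][x] for y in good)
        # Scale up to the full region height to account for omitted rows.
        sums.append(total * bheight / len(good))
    return sums


def boxcar(values, bwidth):
    """Running mean over +/- bwidth/2 pixels, truncated at the edges."""
    half = bwidth // 2
    out = []
    for i in range(len(values)):
        window = values[max(0, i - half):i + half + 1]
        out.append(sum(window) / len(window))
    return out


def background(image, dq, slope, b_bkg1, b_bkg2,
               bheight, bwidth, height, sdqflags=0):
    """Background scaled to the object extraction box, per column."""
    b1 = boxcar(region_sum(image, dq, slope, b_bkg1, bheight, sdqflags), bwidth)
    b2 = boxcar(region_sum(image, dq, slope, b_bkg2, bheight, sdqflags), bwidth)
    return [(u + v) * height / (2.0 * bheight) for u, v in zip(b1, b2)]
```

With a uniform image of one count per pixel, the sketch returns HEIGHT counts per column, which is the expected background inside an extraction box HEIGHT pixels tall.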

3.4.20 FLUXCORR/TDSCORR: Conversion to Flux

This module converts the extracted spectrum into physical units, and allows for time dependencies in the conversion.

- Reference files: FLUXTAB, TDSTAB

- Input file: x1d

- Header keywords updated: none

If FLUXCORR=PERFORM, FLUXCORR divides the NET and ERROR columns by the appropriate sensitivity curve read from the FLUXTAB reference table, which converts them to flux units (erg cm^-2 s^-1 Å^-1). The NET divided by the sensitivity is written to the FLUX column of the x1d file, while the ERROR column is modified in-place.

The sensitivity curves read from the reference files are interpolated to the observed wavelengths before division. The flux calibration is only appropriate for point sources and has an option to accommodate time-dependent sensitivity corrections.

If TDSCORR=PERFORM, then the module TDSCORR will correct for temporal changes in the instrumental sensitivity relative to the reference time given by the REF_TIME keyword in the FITS header of TDSTAB. TDSTAB provides the slopes and intercepts needed to construct a relative sensitivity curve. The curve for the epoch of the observation is determined by piecewise linear interpolation in time using the slopes and intercepts closest to the time of the observation. The sensitivity may be discontinuous at an endpoint of a time interval. Different piecewise linear functions may be specified for each of the wavelengths listed in the table. This process results in a relative sensitivity at the epoch of the observation, at the wavelengths given in the reference table. Interpolation between these wavelengths to the observed wavelength array is also accomplished by piecewise linear interpolation.
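The flux conversion and the time-dependent correction can be sketched as below. Several details are assumptions of this sketch rather than facts about calcos or the reference files: the relative sensitivity is taken as intercept + slope * (t_obs - ref_time) with the slope in units of fractional change per unit time, the time segment is chosen as the one whose start time most recently precedes the observation, interpolation is clamped at the table ends, and the relative sensitivity multiplies the FLUXTAB sensitivity before division. All function names are hypothetical.

```python
from bisect import bisect_right


def interp_linear(x, xp, fp):
    """Piecewise linear interpolation; xp ascending, clamped at the ends."""
    if x <= xp[0]:
        return fp[0]
    if x >= xp[-1]:
        return fp[-1]
    j = bisect_right(xp, x)
    frac = (x - xp[j - 1]) / (xp[j] - xp[j - 1])
    return fp[j - 1] + frac * (fp[j] - fp[j - 1])


def tds_factor(t_obs, ref_time, segment_starts, slopes, intercepts):
    """Relative sensitivity at one tabulated wavelength for epoch t_obs."""
    k = max(bisect_right(segment_starts, t_obs) - 1, 0)
    return intercepts[k] + slopes[k] * (t_obs - ref_time)


def flux_calibrate(wavelength, net, error, sens_wl, sens,
                   tds_wl=None, tds=None):
    """Divide NET and ERROR by the (optionally TDS-corrected) sensitivity."""
    flux, flux_err = [], []
    for wl, n, e in zip(wavelength, net, error):
        s = interp_linear(wl, sens_wl, sens)
        if tds_wl is not None:
            s *= interp_linear(wl, tds_wl, tds)
        flux.append(n / s if s > 0 else 0.0)
        flux_err.append(e / s if s > 0 else 0.0)
    return flux, flux_err
```

Here tds would be the list of tds_factor values computed at each wavelength in tds_wl for the epoch of the observation.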

3.4.21 HELCORR: Correction to Heliocentric Reference Frame

This module converts the observed wavelengths to Heliocentric wavelengths.

- Reference file: none

- Input files: rawtag, x1d

- Header keywords updated: V_HELIO

In addition to the Doppler smearing from HST orbital motion, the photons acquired during an observation are also Doppler shifted due to the orbital motion of the Earth around the Sun (V ~ 29.8 km/s). The sign and magnitude of the Doppler shift depend on the time of the observation as well as the coordinates of the target (i.e., the position of the target relative to the orbital plane of the Earth).

The HELCORR module in calcos transforms wavelengths of a spectrum to the heliocentric reference frame. It is applied to the extracted 1D spectrum during the operation of X1DCORR, by utilizing the keyword V_HELIO, which is the contribution of the Earth’s velocity around the Sun to the radial velocity of the target (increasing distance is positive), in km/s. It is computed by calcos and written to the science data header of the output corrtag file before spectral extraction is performed.

The shift at each wavelength is:

| \mathrm{\lambda_{Helio} = \lambda_{Obs} [1 - (V_{Helio}/c)]} |

where λ_Helio is the corrected wavelength (Å), c is the speed of light in km/s, and λ_Obs is the wavelength before the heliocentric correction.

The velocity vector of the Earth is computed in the J2000 equatorial coordinate system, using derivatives of low precision formulae for the Sun’s coordinates in the Astronomical Almanac. The algorithm does not include Earth-Moon motion, Sun-barycenter motion, or the Earth-Sun light-time correction.
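The wavelength shift itself is simple to apply once V_HELIO is known. The sketch below only applies the formula for the shift given above; it does not compute V_HELIO, and the function name is hypothetical.

```python
C_KM_S = 299792.458  # speed of light in km/s


def heliocentric_wavelengths(wavelengths, v_helio):
    """Apply lambda_helio = lambda_obs * (1 - v_helio / c); v_helio in km/s."""
    factor = 1.0 - v_helio / C_KM_S
    return [wl * factor for wl in wavelengths]
```

For a velocity of 29.8 km/s, a line observed at 1500 Å shifts by about 0.15 Å.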

3.4.22 Finalization (making the x1dsum files)

Once the processing is complete, an x1d file is written for each spectroscopic exposure. This file includes spectra from both segments A and B for the FUV detector, and from all three stripes for the NUV detector. In addition, one or more x1dsum files are created. This is done even if only one spectrum was obtained.

The x1dsum files differ from the x1d files in one important respect. When an x1dsum file is created the DQ_WGT array (Section 2.7.2) is used to determine whether a point is good or bad. When only a single file contributes to the x1dsum file, if DQ_WGT = 0 for a pixel, then the counts, net and flux arrays for that point are set to zero. If the x1dsum or x1dsum<n> (for FP-POS observations) includes several x1d files (see Section 2.4.3), then, for each point in the spectrum, only those files with a DQ_WGT = 1 at that point are included (weighted by the individual exposure times), and the DQ_WGT array in the x1dsum file is updated to reflect the number of individual spectra which contributed to the point. If the updated value of DQ_WGT for a particular point is 0, then the value of the spectrum at that point is set to 0 in the x1dsum file.
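The combination rule for several x1d spectra can be sketched as follows. The sketch assumes that all input spectra are already on a common wavelength grid and that each input DQ_WGT value is 0 or 1; how calcos aligns the spectra is not covered here, and the function name is hypothetical.

```python
def combine_spectra(fluxes, dq_wgts, exptimes):
    """Exposure-time-weighted mean of the good points at each pixel.

    Returns (combined_flux, combined_dq_wgt), where combined_dq_wgt counts
    the spectra that contributed to each point.
    """
    npts = len(fluxes[0])
    out_flux, out_wgt = [], []
    for i in range(npts):
        weighted_sum = 0.0
        total_time = 0.0
        n_good = 0
        for flux, wgt, t in zip(fluxes, dq_wgts, exptimes):
            if wgt[i] == 1:
                weighted_sum += flux[i] * t
                total_time += t
                n_good += 1
        # Points with no good contributor are set to zero.
        out_flux.append(weighted_sum / total_time if n_good else 0.0)
        out_wgt.append(n_good)
    return out_flux, out_wgt
```

With a single input spectrum this reduces to zeroing every point where DQ_WGT = 0, matching the single-file case described above.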